1Proteins¶

Let’s consider a 100-residue peptide chain with a unique folded state. If each amino acid had only two available conformations, the number of configurations available to the chain would be . If the time required to switch between any two configurations was 10-12 s (a picosecond), and we assume that no configuration is visited twice, it would take approximately 1018 seconds to explore all the “phase space” (and therefore, on average, to fold): this is close to the age of the universe! In reality, proteins fold on time scales ranging from microseconds to hours. This is the gist of the famous “Levinthal’s paradox”, which implies that the search for the folded structure does not happen randomly, but it is guided by the energy surface of residue-residue interactions.

Proteins can be denatured by changing the solution conditions. Very high or low temperature and pH, or high concentration of a denaturant (like urea or guanine dihydrochloride) can “unfold” a protein, which loses its solid-like, native structure, as well as its ability to perform its biological function. Investigations of the unfolding of small globular proteins showed that, as the denaturing agent (e.g. temperature or denaturant concentration) increases, many of the properties of the protein go through an “S-shaped” change, which is characteristic of cooperative transitions.

Furthermore, calorimetric studies show that the denaturation transition is an “all-or-none” transition, i.e. that the “melting unit” of the transition is the protein as a whole, and not some subparts. This of course applies to single-domain small proteins, or to the single domains of larger proteins. Here “all-or-none” means that the protein exist only in one of two states (native or denaturated), with all the other “intermediate” states being essentially unpopulated at equilibrium. Therefore, this transition is the microscopic equivalent of a first-order phase transition (e.g. boiling or melting) occurring in a macroscopic system. Of course, since proteins are finite systems and therefore very far from the thermodynamic limit, this is not a true phase transition, as the energy jump is continuous, and the transition width is finite.

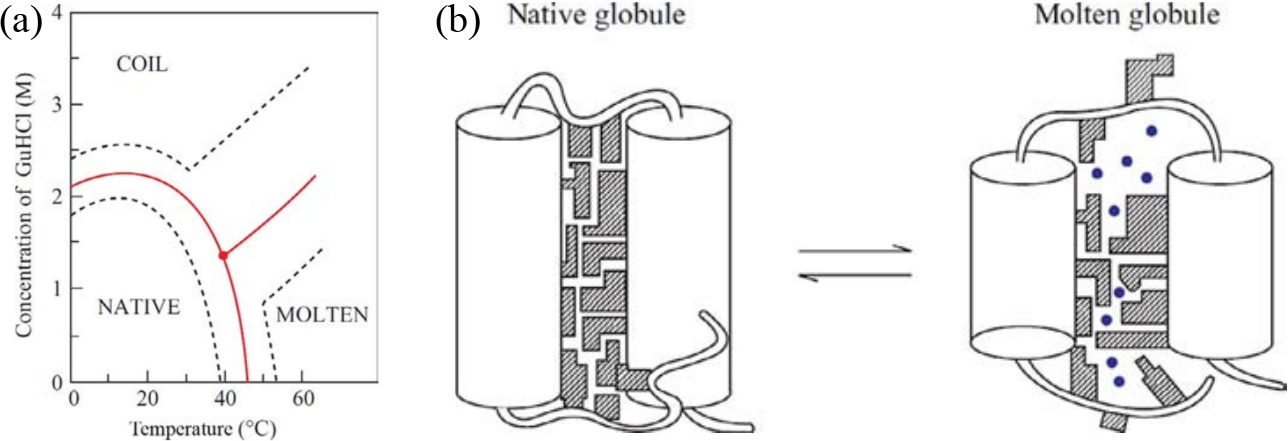

Figure 1:(a) Phase diagram of the conformational states of lysozyme at pH 1.7 as a function of denaturant (guanine dihydrochloride) concentration and temperature. The red lines correspond to the mid transition, the dashed lines outline the transition zones. (b) Schematic model of the native and molten globule protein states. Here the protein consists of only two helices connected by a loop, and the side chains are shown as shaded regions. Adapted from Finkelstein & Ptitsyn (2002).

But how does the denatured state look like? As discovered by using a plethora of experimental methods, which often seemed contradicting each other, the answer depends on the denaturing conditions. Figure 1(a) shows the phase diagram of lysozyme (a globular small protein) for different temperatures and concentrations of a denaturing agent. Increasing the latter leads to a transition to a disordered coil state where essentially no secondary structure is present. By contrast, the effect of temperature is qualitatively different, as the protein partially melts in a state (the molten globule, MG) that retains most of its secondary structure, but it is not solid like and it cannot perform any biological function. A schematic of the difference between the native and molten globule states is shown in Figure 1(b).

The cooperative transition that leads to the molten globule is due to the high packing of the side chains, which is energetically favourable but entropically penalised, since it impedes the small-scale backbone fluctuations that trap many of the internal degrees of freedom of the protein. The liberation of these small-scale fluctations, and the resulting entropy gain, requires a slight degree of swelling, which is why the MG is not much larger than the native state. However, such a swelling is large enough to greatly decrease the van der Waals attractions, which are strongly dependent on the distance, and to let solvent (water) molecules in the core.

In general, not all proteins behave like this, as there exist some (usually small) proteins that unfold directly into a coil, others that form the molten globule under the effect of specific denaturants, and coils under the effect of others, etc. However, the unfolding transition has nearly always an “all-or-none”, cooperative nature.

Generally speaking, the denaturation of a protein is a reversible process, provided that some experimental conditions are met (the protein is not too large, its chemical structure has not been altered by the denaturation process or by post-translational modifications, etc.). The fact that protein folding and unfolding is a reversible process implies that all the information required to build a protein is stored in its sequence, and that its native structure is thermodynamically stable. Therefore, the folding process can be, in principle, understood by using thermodynamics and statistical mechanics. The sequence structure hypothesis is sometimes called Anfisen’s dogma, named after the Nobel Prize laureate Christian B. Anfisen, whose studies on refolding of ribonuclease were key to establish the link between “the amino acid sequence and the biologically active conformation” (Anfinsen et al. (1961), Anfinsen (1973)).

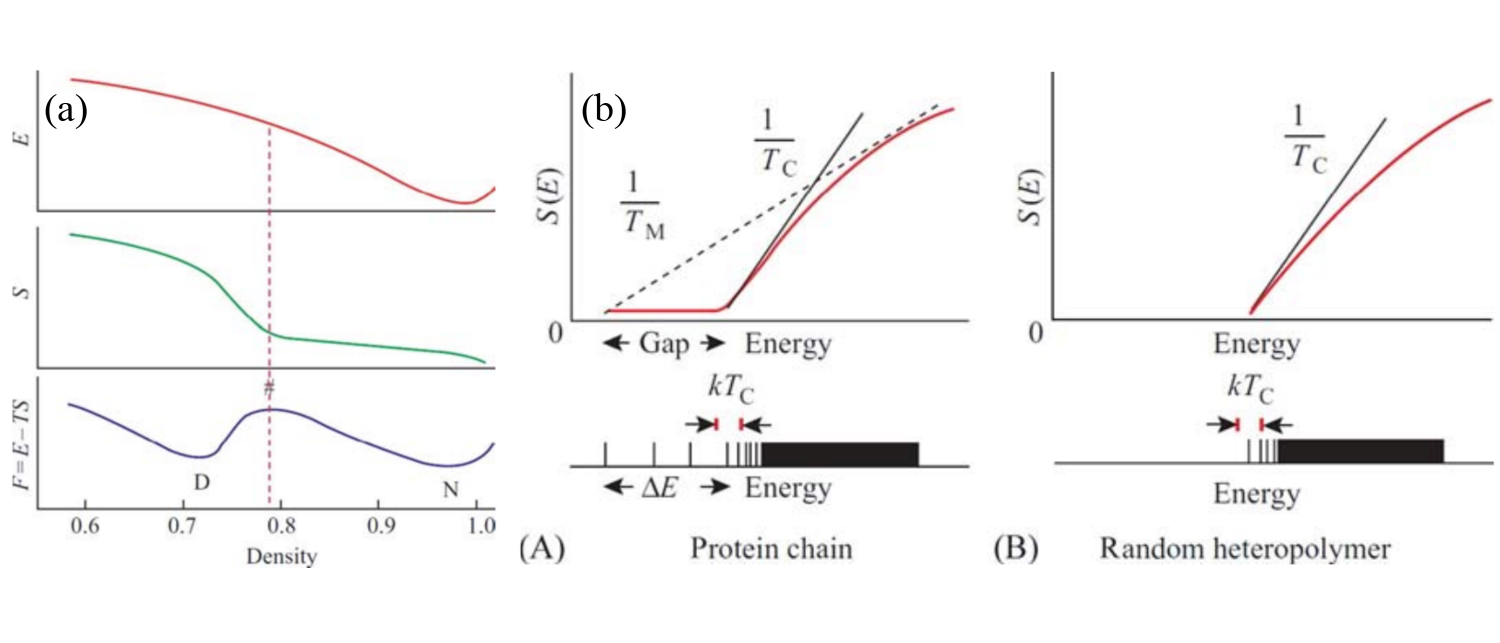

Figure 2:(a) Typical curves for the energy, entropy and free-energy of a protein as functions of the (intramolecular) density. The D and N letters mark the positions of the denatured and native states, respectively. The hash pound marks the position of the maximum of the free-energy barrier. (b) A sketch of the energy spectra (bottom panels) and associated curves (top panels) for (A) a protein and (B) a random heteropolymer. Adapted from Finkelstein & Ptitsyn (2002).

Figure 2(a) shows the origin of the “all-or-none” transition observed in protein denaturation in thermodynamic terms. Here I will use the description given by Finkelstein & Ptitsyn (2002) (whence the figure comes), almost word by word. The panel shows the energy and the entropy of a protein as a function of its internal density (in some arbitrary units). The energy is at its minimum at close packing, while the entropy S increases with decreasing density. Initially, this increase is slow, since the side chains can only vibrate, but their rotational isomerization is impossible. When the density decreases enough that the latter becomes possible the increase is much steeper. Finally, when free isomerization has been reached the entropy growth becomes slow again.

The nonuniform growth of the entropy is due to the following. The isomerization needs some vacant volume near each side group, and this volume must be at least as large as a group. And, since the side chain is attached to the rigid backbone, this vacant volume cannot appear near one side chain only but has to appear near many side chains at once. Therefore, there exists a threshold (barrier) value of the globule’s density after which the entropy starts to grow rapidly with increasing volume (decreasing density) of the globule. This density is rather high, about of the density of the native globule, since the volume of the group amounts to of the volume of an amino acid residue. Therefore, the dependence of the resulting free energy on the globule’s density has a maximum (the so-called free-energy barrier) separating the native, tightly packed globule (N) from the denatured state (D) of lower density. It is because of the presence of this free energy barrier that protein denaturation occurs as an “all-or-none” transition akin to melting or nucleation, independent of the final state of the denatured protein.

It is useful, when talking about proteins, to also introduce the concept of random heteropolymers, which are polymers with sequences that have not been selected by nature, and therefore have many (degenerate or quasi-degenerate) ground states rather than a single native conformation. Figure 2(b) shows the qualitative difference between a protein and a random heteropolymer. The bottom panels of the figure show the typical energy spectra of the two chains, where each line corresponds to a single conformation. At high energy both spectra are essentially continuous. However, the low-energy, discrete parts look very different: the energy difference between the ground state and the continuous part of the spectrum, where the majority of the “misfolded” states lie, is much larger in a protein than in a random heteropolymer. It is this energy gap that creates the free-energy barrier of Figure 2(a).

We can also define the entropy , which is proportional to the logarithm of the number of structures having energy , and the temperature corresponding to the energy , implicitly defined by the relation . As sketched in Figure 2(b), the peculiar shape of the protein energy spectrum gives raise to a concave part of , which means that there is a temperature at which the lowest-energy, native conformation coexists with many high-energy (denatured) ones. In this simple picture, this is the melting temperature . This temperature should be compared with the so-called “glass temperature” , called in the figure, which is the temperature associated to the lowest energy of the continuous band of the spectrum. Above the chain behaves, from the kinetic point of view, like a viscous liquid, while below the glass temperature the behaviour is glassy-like (i.e. non-Arrenhius dependence on temperature, aging, non ergodicity, etc.).

If , the slow dynamics asssociated to the glass state makes it kinetically impossible to reach the ground state, and the chain remains trapped in higher-energy misfolded states. By contrast, if , folding is possible, and results obtained with minimalistic models show that the fraction is a good proxy for “foldability”: the larger this fraction, the faster the protein folds.

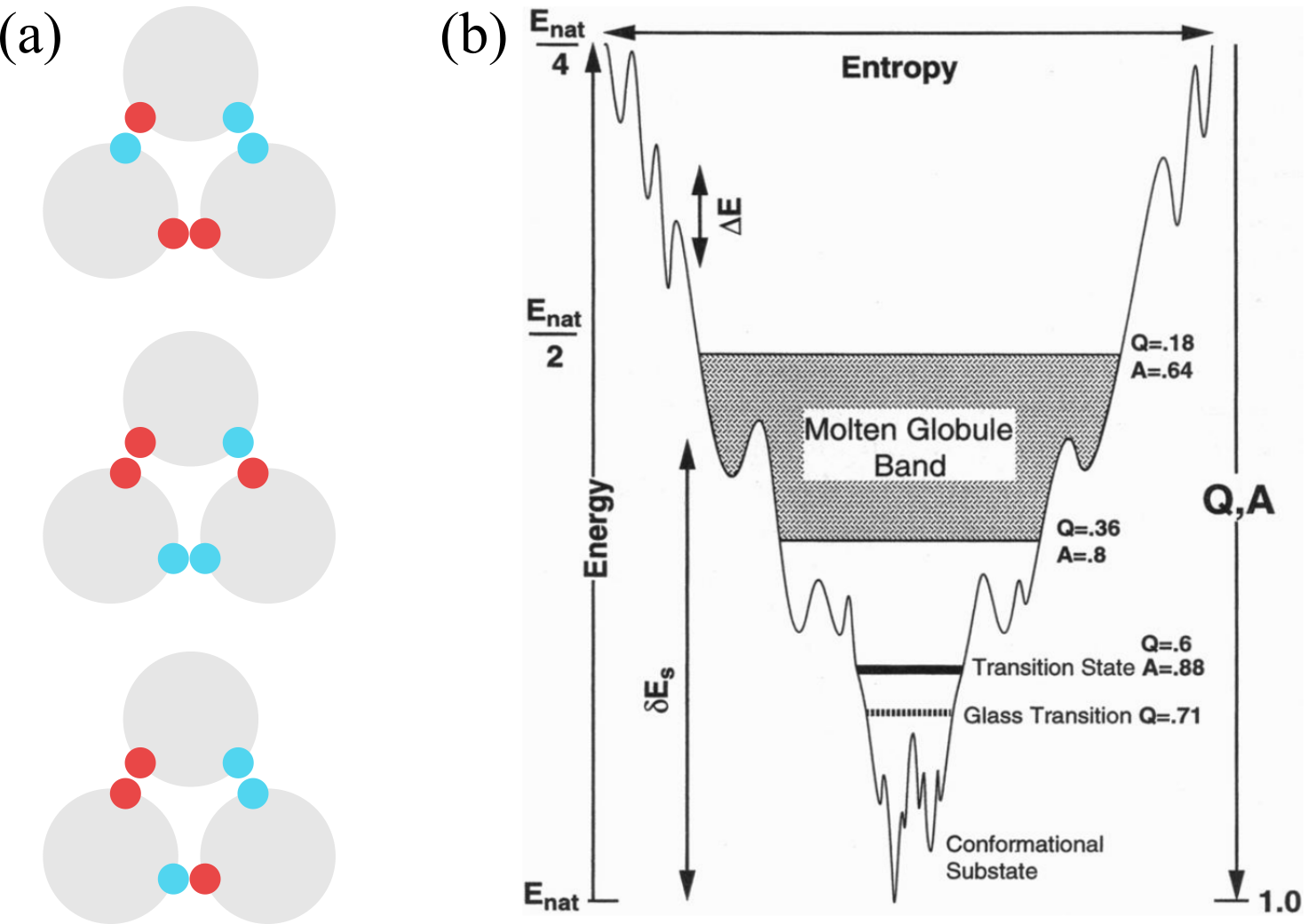

Figure 3:(a) An example of frustrated interactions: if the particle-particle interaction is such that only like colours want to be close to each other, there is no way of arranging the three spheres so that all favourable contacts are made. As a result, the ground state is degenerate. (b) A schematic energy landscape of a 60 amino-acid helical protein. and are the fractions of correct dihedral angles in the backbone and correct native-like contacts, respectively, is the “ruggedness” of the landscape, and is the energy gap. Taken from Onuchic et al. (1995).

All these concepts are not merely qualitative, but have been grounded in theory by using sophisticated statistical mechanics approaches that have generated the “funnel landscape” folding picture, which is schematically shown in Figure 3(b).

The basic idea is to leverage the concept of frustration, which happens when the interactions between the different parts of a system are such that they cannot be satisfied, from the energetic point of view, all at the same time. If this is the case, then there is no single ground state, but rather many low-energy stable states that, in a many-body system, are uncorrelated from each other and separated by (possibly large) energy barriers. An example is provided in Figure 3(a). The resulting “energy surface” (or landscape) is said to be rugged (or rough), and generates a dynamics where the system sits in a valley for a long time before being able to jump over the barrier and move to a different stable conformation. This is a hallmark of glassiness.

To draw a parallel with proteins, we can consider a random heteropolymer, where the interactions among the different amino acids will be frustrated, blocking the system from finding a single well isolated folded structure of minimum energy. A candidate principle for selecting functional sequences is thus the minimization of this frustration. Of course even in the absence of frustration, there are still energetic barriers on the path towards the native conformation due to local structural rearrangements which still give raise to a rugged landscape, slowing down the kinetics towards the folded state. This scenario has come to be called a folding “funnel”, emphasizing that there is a single dominant valley in the energy landscape, into which all initial configurations of the system will be drawn.

A classic funnel diagram, such as the one shown in Figure 3(b), represents an energy-entropy landscape, with the width being a measure of the entropy, whereas the depth represents both the energy and two correlated order parameters and , which are the fractions of native contacts and correct dihedral angles in the protein backbone. Although in reality the landscape is multidimensional, here the projection attempts to retain its main features, such as the barrier heights, which are a measure of the “ruggedness” or “roughness” of the landscape. The typical height of these barriers, together with the energy gap and the number of available conformations, are the three main parameters of the Random Energy Model, which is one of the main theoretical tools in this context.

1.1The Zimm-Bragg model for the helix-to-coil transition¶

As we discussed, polypeptides can, in general, be reversibly denatured (with temperature or pH, for instance). Here we theoretically investigate this phenomenon in the context of secondary structure with the Zimm-Bragg model, which is a toy model for a chain that can exhibit a continuous coil-to-helix transition. Note that it is possible to synthesise polypeptide chains that undergo such a transition, see e.g. Doty & Yang (1956).

Consider a polymer chain composed of residues. Each residue can be in one of two states, “coil” (c) or “helix” (h), so that a microscopic state of the chain can be expressed as a one-dimensional sequence, like ccchhhhhcchhhchcc. In equilibrium, the probability (or statistical weight) to observe a given chain microstate is just the Boltzmann factor, , where is the free-energy cost of the microstate, so that the total (configurational) partition function is

Since (free) energies are always defined up to a constant, we define the free-energy of a coil of any length to be zero by definition, so that the statistical weight of a coil residue is always 1. By contrast, forming a sufficiently long helical segment should be advantageous, otherwise no transition would occur. However, we know that in the helices formed by polypeptides, each -th amino acid is hydrogen-bonded to the -th, where for a -helix. As a consequence, since one full helical turn needs to be immobilised before seeing any benefit from the formation of hydrogen bonds, the formation of a helix incurs a large entropic penalty that needs to be paid every time a coil segment turns into a helical one, . However, once a helix is formed and the initial price has been paid[1], adding an H contributes a fixed amount to the helix free energy. As a result, the free-energy contribution of a helical stretch of size is , so that is statistical weight is

where we have defined the cooperativity (or initiation) parameter, , and the helix-elongation (or hydrogen-bonding) parameter, . Note that, since is purely entropic, and therefore does not depend on temperature.

Following Finkelstein & Ptitsyn (2016), we define two accessory quantities: is the partition function of a chain of size , where the last monomer is in the coil state, and is the partition function of a chain of size , where the last monomer is in the helix state. With this definition, the partition function of the chain is . We can explicitly compute the first terms:

For , the sequence is either

corh, so that and .For , we have two sequences ending with c, which are

hcandcc, and two sequences ending with h, which arehhandch. Each partition function is given by a sum of the statistical weights of the allowed microstates, so that we have and .For , there are 8 available sequences, which are built by taking each sequence and adding either

corhat its end. Each of the resulting sequence will have a statistical weight that is that of the base sequence, multiplied by 1 if the sequence ends withc, and by or if the sequence ends withhand the preceeding character ishorc, respectively. Summing up the contribution we obtain and .

In general the operations carried out to compute the partial partition functions can be applied to any other value of , meaning that we can write down recursive relationships connecting and to and . These relationships can be neatly expressed in matricial form:

It is convenient to define the matrix

so that the total partition function of a chain of monomers can be written as[2]

where the first vector-matrix multiplication on the right-hand side gives , which multiplied by the column vector yields . The partition function can be written in terms of the eigenvalues of if we note that

where , and and (with ) are the eigenvalues of , which can be found by solving the secular equation, viz.:

whence

Note that the two eigenvalues are linked by the relation .

Since they can be of any length, the two eigenvectors and of a 2x2 matrix each have a single independent element, (where ), which is given by

We obtain and , and therefore and . The two eigenvectors are used to construct the diagonalising matrix and its inverse[3]

We can now write Eq. (7) explicitly in terms of and :

From the partition function we can compute any observable we need. The most interesting quantity is the average fraction of residues that are in the helical state h, which can be compared to experiments. First of all, we note from the and we explicitly calculated and from Eq. (5) that the partition function is a polynomial in , and therefore can be written as

where are coefficients that do not depend on , but only on and on the multiplicity of each state with helical residues. Therefore, by definition the probability that the chain has exactly helical residues is , which means that the average number of helical residues is

since and only depends on . Defining the fraction of helical residues, , and exploiting the known property of logarithms we find

Substituting Eq. (13) it is possible to write in the following closed, albeit rather cumbersome, form :

For sufficiently long chains, and the expression can be greatly simplified:

The model as described and solved takes into account the cooperativity of the transition through the parameter . It is instructive to look at the non-cooperative solution, which can be obtained by setting . In this case and , so that

which is independent of and describes the simple chemical equilibrium of a reaction. Indeed, defining the equilibrium constant , where is the concentration of , we find that the fraction of residues in the helical state is

which, if compared with Eq. (19), shows that .

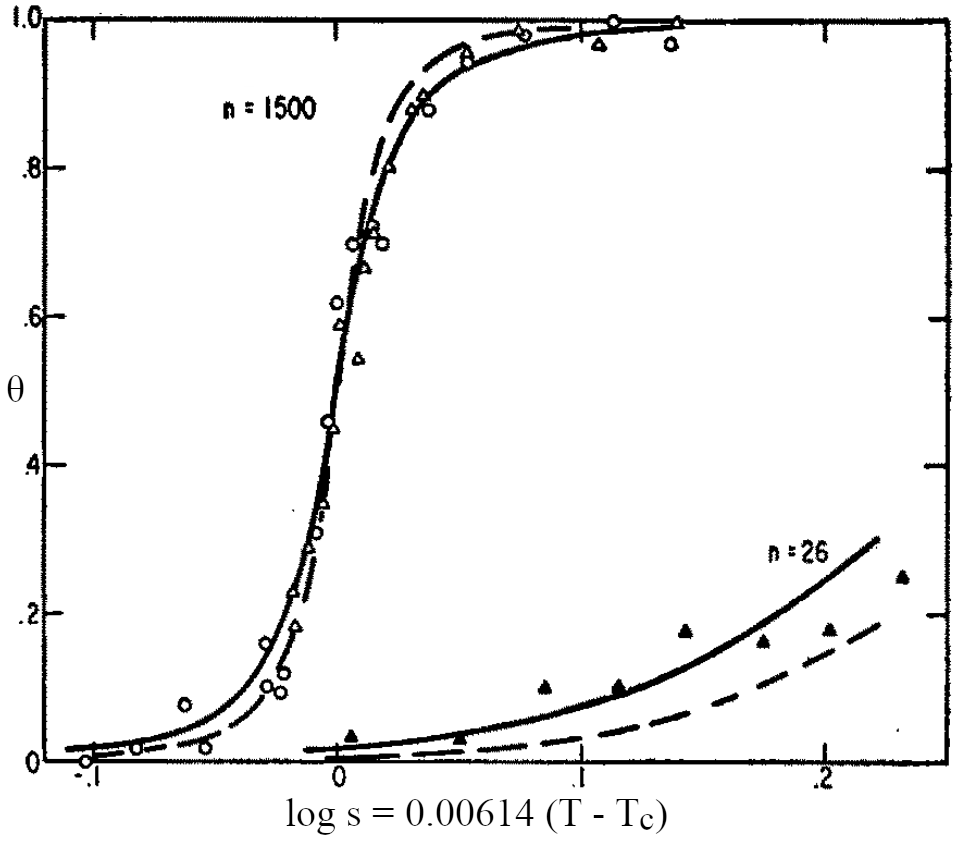

Figure 4:Comparison between experimental data (points) and theoretical fits to Eq. (17) (lines). The number of monomers is and for the top and bottom curves, respectively. is fixed by the relation , where is the temperature at which . Solid and dashed lines correspond to and , respectively. Adapted from Zimm & Bragg (1959).

A comparison between the Zimm-Bragg theory and experiments on poly--benzyl-L-glutamate of different lengths are reported in Figure 4. It is clear that there is a strong -dependence that shows the cooperative nature of the transition. Moreover, in fitting the curves it is assumed that does not depend on , since it has a purely entropic origin. However, note that in this particular experimental system the observed fraction of helical monomers grows with temperature: due to the combination of solvent and peptides used, the parameter here takes into account not only the enthalpic contribution due to the residue-residue interactions, but also the contribution due to solvent-solute interactions.

1.2The HP model¶

Globular proteins exhibit a unique characteristic compared to other isolated polymer molecules: their native structure is unique, meaning that the path formed by the backbone is essentially fixed, bar some small fluctuations. The question arises: what forces, determined by the amino acid sequence, contribute to this remarkable specificity? Of course, as discussed before, a significant constraint is provided by the fact that native structures are compact. However, even for compact chain molecules, many conformations are possible, with the number of maximally compact conformations increasing exponentially with chain length. Among the various physically accessible compact conformations, there are in principle many different interactions that can impact their thermodynamic stability and thus select the native structure. However, it is now well accepted that the interaction type that most contribute to this conformational reduction is hydrophobicity: given a specific amino acid sequence, the number of compact conformations that it can take is greatly reduced by the constraint that residues with hydrophobic side chains should reside inside the globule, while polar and hydrophilic residues should be on the protein’s surface (see e.g. Finkelstein & Ptitsyn (2002) and Dill (1990)).

A simple model that has been used to demonstrate the importance of hydrophobic interactions in selecting the native structure is the Hydrophobic-Polar (HP) protein lattice model. In this model, proteins are abstracted as sequences composed solely of hydrophobic (H) and polar (P) amino acids. In the HP model, the protein sequence is mapped onto a grid, or lattice, which can be two-dimensional (2D) or three-dimensional (3D). Common lattice types used include square lattices for 2D models and cubic lattices for 3D models, but other choices are possible.

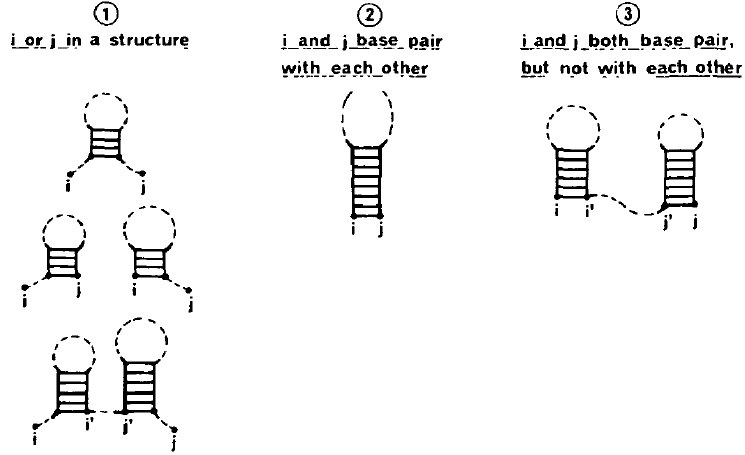

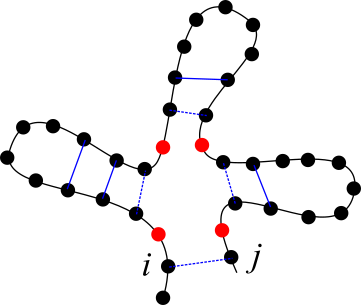

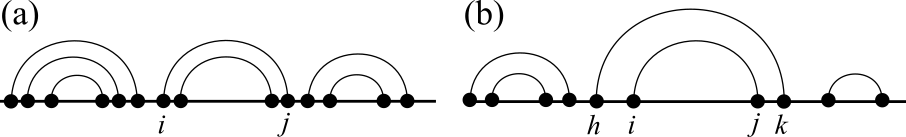

Here I will present the 2D square lattice version of the model[4]. Each of the amino acids of the protein is assigned a type: “H” means hydrophobic, and “P” means polar, so that the sequence of the protein is then the ordered list of amino acids (e.g. “HHPHPPPHHP”). The -th amino acid in the sequence is identified by its index and occupies a point on this grid, and two amino acids cannot occupy the same grid point. Two amino acids and for which are “connected neighbours” and share a backbone edge representing the peptide bond, which on the square lattice can be either horizontal or vertical. By contrast, two amino acids and for which that are adjacent in space but not along the sequence are said to be “topological neighbours”. Every pair of HH topological neighbours form a topological contact that contributes an amount of free energy , while the other contacts give and . In the simplest version of the model . In this case, the total (dimensionless) free energy of a conformation is

where is the number of HH topological contacts. Following Lau & Dill (1989), the lowest-energy (highest-) conformations of a chain with a specific sequence and a length are called “native”.

The concept of topological contacts is also useful to describe the degree of compactness of a conformation, defined as

where is the number of topological contacts, irrespective of the type of neighbouring residues, and is the largest possible number of topological contacts for a chain of size , which is

where is the perimeter of the smallest box that can completely surround the protein. By this definition, (maximally) compact conformations have .

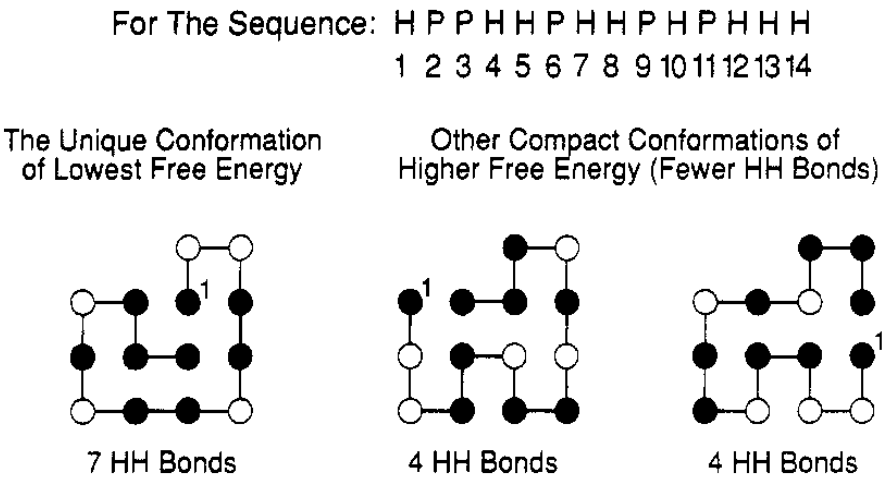

Figure 5:The sequence “HPPHHPHHPHPHHH” has a single conformation with 7 topological contacts (shown on the left). All other conformations, like the two on the right, have fewer hydrophobic contacts. Hydrophobic and polar residues are shown as black and white circles, respectively. Taken from Dill (1990).

As an example, Figure 5 shows the single lowest-energy conformation of a given sequence, together with two other compact conformations of higher free energy. It is rather evident that the native conformation has a core that is richer in hydrophobic residues compared to the other two.

In order to be more quantitative, some useful observables can be introduced. In general, the thermodynamic properties of a chain of length and a given sequence can be computed from the partition function:

where is the number of distinct conformations of an -chain, so that the sum runs over all the conformations. If the length of the chains is not too large[5], it is possible to numerically perform a complete (exhaustive) enumeration of all possible chain conformations, from which any quantity of interest can be computed as an ensemble average, viz.

Since , the partition function can also be written as a sum over conformations of fixed number of HH topological contacts :

where is the maximum number of HH topological contacts and is the number of distinct chain conformations having . Note that by this definition, the native conformations are those for which . We also define as the number of conformations that have HH topological contacts and non-HH topological contacts, which is connected to by

Since partition functions are defined up to a multiplicative constant, we multiply by to obtain[6]

With this definition it is straightforward to take averages over native conformations only. Indeed, if we take the limit, is non-zero only for , and the partition function becomes

We now define some useful average values to characterise the different states. The average compactness over all conformations is

and the average compactness over the native conformations is

where we used the fact that

The average energy is proportional to the average number of HH contacts, which is

Finally, we know already that most of the hydrophobic residues are buried in the core of globular proteins. In the HP model we define the core as the set of residues that are completely surrounded by other residues. Let the number of core residues be , and the number of hydrophobic core residues be , then the degree of hydrophobicity of a given conformation can be estimated as

and its averages over all and native conformations can be computed as

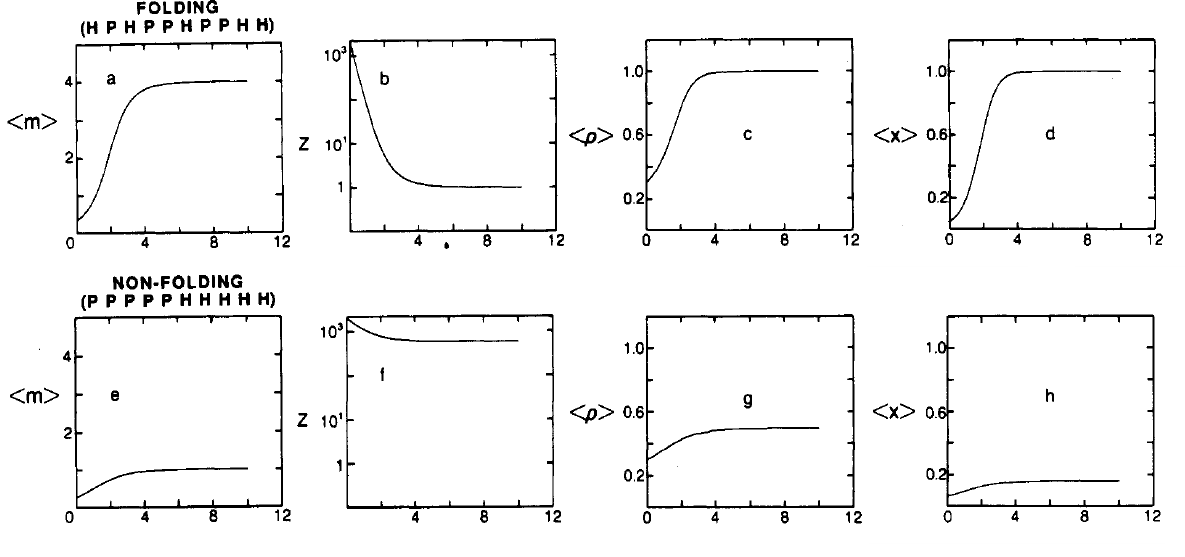

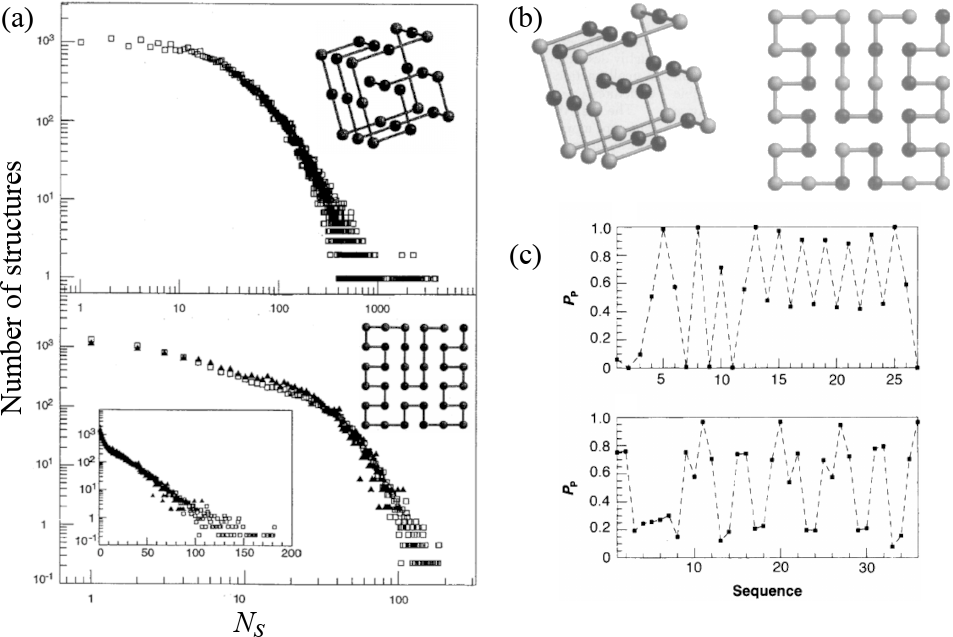

Figure 6:Ensemble averages of folding (top) and non-folding (bottom) sequences. Here is the value of the partition function, which I call . All quantities are plotted as a function of . Adapted from Lau & Dill (1989).

The ensemble averages of these quantities, as well as of the partition function, which is a measure of the number of available conformations, as a function of for two sequences are shown in Figure 6. Looking at the top panels it is clear that if the HH interaction is strong the preferred conformations of the HPHPPHPPHH sequence have low energy, are few (), compact, , and have a purely hydrophobic core, : this is a “folding” sequence.

By contrast, the bottom panels show a sequence that behaves very differently: the low-energy (native) conformations are not compact and large in number. Looking at the sequence itself, PPPPPHHHHH, it is clear that the hydrophilic “tail” does not make it possible to form a conformation with a hydrophobic core, and the number of HH topological contacts itself cannot be too high. These are all clear signs of a “non-folding” sequence.

Note that the quantities reported in Figure 6 are somewhat correlated: folding sequences tend tend to have few (sometimes one) low-energy compact conformations with hydrophobic cores, whereas non-folding sequences tend not to have any of these attributes.

The HP model (or its extensions) can be used to understand some general or even specific aspects of protein folding because of its simplicity. The 2-letter alphabet and the greatly reduced residue conformations due to the underlying lattice makes it possible to fully explore (at the same time) the conformational space (i.e. the set of all possible internal conformations of a molecule) and the sequence space (i.e. the set of all possible sequences of H and P residues). Both spaces have sizes that grow exponentially with , and therefore the maximum length of chains that can be investigated by exhaustive enumeration is somewhat limited, but there are computational techniques that can be used to circumvent this issue.

1.2.1Folding HP sequences¶

If we now use a stricter definition of a “folding” sequence as a sequence that has a single native conformation, we can draw a comparison between lattice folding sequences and real proteins. First of all, how many folding sequences there are?

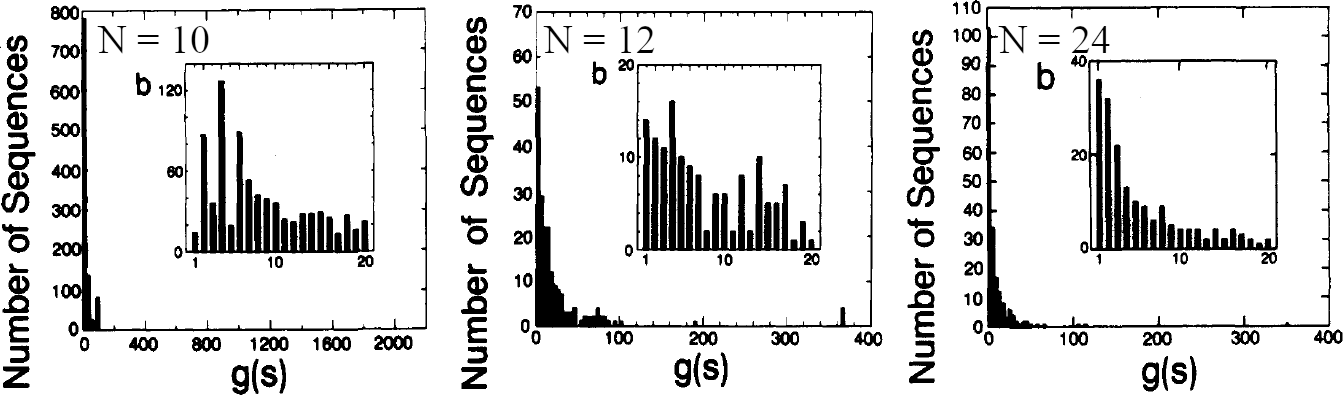

Figure 7:The distribution of the number of sequences having native conformations for chain length and 24. In the figure . Adapted from Lau & Dill (1989).

Figure 7 shows the distributions of the number of native conformations , i.e. how many sequences have the given degeneracy, for different chain lengths. As increases, the distribution becomes more and more peaked on 1: far more sequences have a single lowest-energy conformation rather than, say, 10 or 100. These results suggest that hydrophobicity alone is enough to greatly reduce the number of compact configurations into a small set of folding candidate structures (Dill (1990)), and confirms that the hydrophobic force is a major driving force for protein folding (see e.g. Finkelstein & Ptitsyn (2002)).

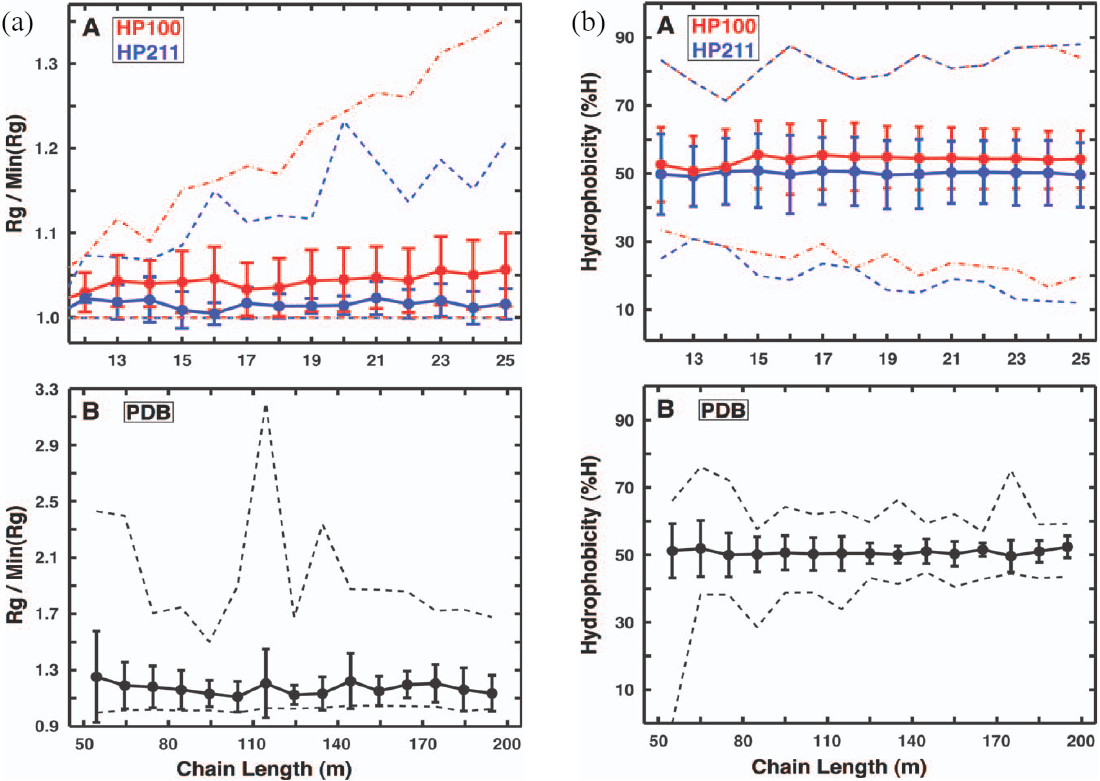

Figure 8:(a) Compactness and (b) hydrophobicity as a function of the chain length for (A) two HP lattice models and (B) real proteins. Dashed lines show the range of values. Adapted from Moreno‐Hernández & Levitt (2012).

But to what extent lattice and real proteins are similar? I will present some results reported in Moreno‐Hernández & Levitt (2012). Therein, an exhaustive enumeration of all conformations of chains of lengths up to 25 has been carried out with two HP models:

In HP100, which is the one described above, only HH contacts contribute to a conformation’s free energy.

In HP211 topological contacts between H and P and P and P contribute -1 (i.e. ) to the free energy, while HH contacts contribute . As a result, compared to the HP100 model, all residues feel an attraction that makes compact conformations more favourable.

Some interesting results pertaining to folding sequences are reported in Figure 8. Figure 8(a) shows, as a proxy for compactness, the normalised radius of gyration , where is the smallest radius of gyration among the structures of a given length, for the lattice and real proteins. For real proteins the authors have considered a non-redundant set of 2401 single-domain proteins from the PDB with lengths between 50 and 200 residues long. By comparing the two lattice models, it is evident that the additional attraction between non-hydrophobic residues enhances the compactness in HP211 more, but the variation in compactness, given by the error bars, is very small in both cases, and the compactness itself does not depend on the chain length. The same behaviour is observed in real proteins.

Figure 8(b) shows the percentage of residues that are hydrophobic in the lattice structures and for the same real proteins used for the radius of gyration. Both lattice and real proteins have an average hydrophobicity of , independent of , and the error bars and total range (dashed lines) are comparable in the two cases (although both are a bit larger for the HP model). Folding sequences in the HP model are “protein-like”.

1.2.2Designable HP conformations¶

Now we focus on compact conformations. How many sequences have a given compact conformation as their native (i.e. lowest-energy) state? Does the answer depend on some properties of the selected conformation itself? In the context of the HP model, some of the first answers were provided here with a HP model with , and , chosen in order to fulfill the following physical constraints, rooted in the analysis of real proteins:

Compact conformations have lower energies compared to non-compact ones.

Hydrophobic residues should have the tendency to be buried in the core ().

Different types of monomers should tend to segregate ().

The output of the computation was to enumerate all the native structures of each possible sequence on a 3D 3x3x3 cube and on 2D 4x4, 5x5, 6x5 and 6x6 boxes. The result of this complete enumeration is the list of all possible sequences that can “design” a given structure, or, in other words, that have that structure as their unique native state. The size of this list, , is a measure of the designability of a given structure.

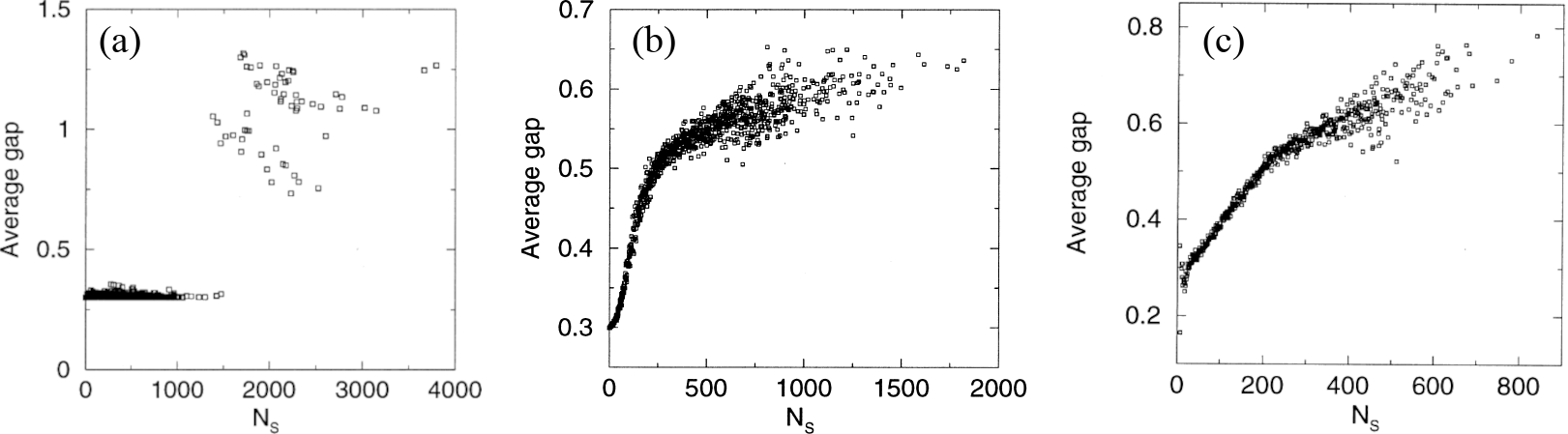

Figure 9:(a) The number of structures with the given for the (top) 3x3x3 cube and (bottom) 6x6 square. In the bottom panel, the number of structures goes below 1 for large , which, given its definition, should not happen. Perhaps the authors normalised the data in some way that, as far as I understand, is not specified in the original paper. (b) The most designable (A) 3D and (B) 2D structures. Hydrophobic and polar residues are coloured in black and grey, respectively. (c) The probability of finding a polar residue as a function of the sequence index for the same two structures. Adapted from Li et al. (1996).

Compact structures differ markedly in terms of their designability: there are structures that can be designed by a large number of sequences, and there are “poor” structures that can be designed by only a few or even no sequences. In fact, for of the conformations there is no sequence that has that structure as its ground state. The majority of the sequences ( out of the 227 total sequences in 3D) have degenerate ground states, i.e. more than one compact conformation of lowest energy, which means that in 3D there exist almost 7 million sequences of length 27 that have a unique compact ground state. Moreover, the number of structures with a given value decreases continuously and monotonically as increases. The data for the 3D and largest 2D cases is shown in Figure 9(a), where the long tails of the distributions, with some structures being the ground states of thousands of sequences, highlight the presence of “highly-designable” compact conformations.

Structures with large exhibit specific motifs (i.e. secondary structures) that small compact structures lack. For instance, the 3D compact structures with the 10 largest values contain parallel running lines packed regularly and eight or nine strands (three amino acids in a row), sensibly more than the average compact structure. Figure 9(b) shows the most designable structures in 3D and in 2D, where it is easy to spot the regularity of the “secondary structures” and, for the 3D structure, also the “strands”.

With the simple lattice model is also possible to directly assess the effect of mutations, which in real proteins is particularly important in the context of homologous sequences (sequences related by a common ancestor). Indeed, focussing on highly designable structures and referring to the different sequences that fold into them as “homologous”, it is possible to observe phenomena that are qualitatively similar to those observed in real proteins. For example, sequences that differ by more than half of their residues can design the same structure (see Figure 20, which will be discussed later, for a real-protein example). Looking at the effect of mutations, the rightmost panel of Figure 9(c) shows that in very designable conformations some residues are highly mutable, whereas others are highly conserved, with the conserved sites being those sites with the smallest or largest number of sides exposed to water.

Figure 10:The average energy gap between the lowest-energy and first excited states of the (a) 3D 3x3 cube and (b) 2D 6x6 HP models, as well as of the (c) 2D 6x6 MJ model. Adapted from Helling et al. (2001)).

Another important feature of proteins that is also reproduced by the model is the large energy gap between the lowest-energy and first excited conformations, which stabilises the native structures from the thermodynamic point of view. In the lattice model, the average energy gap is defined as the minimum energy required to change a native conformation to a different compact structure, averaged over all the sequences that design that native conformation. Figure 10 shows that there is a sudden jump (in 3D) or change of slope (in 2D) at a specific value of . Therefore, the conformations that are highly designable also have large energy gaps, making them highly stable from a thermodynamic point of view.

As shown in the rightmost panel of Figure 10, the same behaviour is also observed when a 20-letter (rather than 2-letter) alphabet is used, with the interactions between the residues being modelled using the MJ interaction matrix, which was derived by analysing the contact frequencies of amino acids from protein crystal structures.

Although the ingredients in the simple lattice model are minimal, lacking many of the factors that contribute to the structure of proteins, the results that have been obtained with it contributed to the understanding that there is a link between designability and thermodynamic stability: “From an evolutionary point of view, highly designable structures are more likely to have been chosen through random selection of sequences in the primordial age, and they are stable against mutations” (Li et al. (1996)).

1.3Sequence alignment¶

Simple models are useful to understand the underlying physics of some particular phenomena. However, how can we understand something very specific, like what is the 3D structure of a particular sequence? The simplest way is to look for similarities: if we already have a list of sequence structure connections, we can try to look whether the new sequence, for which the 3D structure is unknown, is similar, and to what degree, to another for which the 3D structure is already known. This operation is called “sequence alignment” (SA).

Sequence alignment is a fundamental technique in bioinformatics. The primary goal of sequence alignment is to identify regions of similarity that may indicate functional, structural, or evolutionary relationships between the sequences being compared. In the context of proteins, sequence alignment can be useful for reasons that go beyond the 3D structure prediction:

It helps to predict the function of unknown proteins based on their similarity to known proteins.

It aids in understanding evolutionary relationships by identifying conserved sequences among different species, allowing the construction of phylogenetic trees.

It can identify conserved domains that are crucial for the function of proteins.

It helps in identifying potential drug targets by finding unique sequences in pathogens that differ from the host.

It assists in annotating genomes by finding homologous sequences, thus providing insights into gene function and regulation.

Turning a biological problem into an algorithm that can be solved on a computer requires making some assumptions in order to obtain a model with relative simplicity and tractability. In practice, for our sequence alignment model we ignore realistic events that occur with low probability (e.g. duplications) and focus on the following three mechanisms through which sequences vary:

A (point) mutation, or substitution, occurs when some amino acid in a sequence changes to some other amino acid during the course of evolution.

A deletion occurs when an amino acid is deleted from a sequence during the course of evolution.

An insertion occurs when an amino acid is added to a sequence during the course of evolution.

There are many possible sequences of events that could change one genome into another, and we wish to establish an optimality criterion that allows us to pick the “best” series of events describing changes between sequences. We choose to invoke Occam’s razor and select a maximum parsimony method as our optimality criterion[7]: we wish to minimize the number of events used to explain the differences between two sequences. In practice, it is found that point mutations are more likely to occur than insertions and deletions, and certain mutations are more likely than others. We now develop an algorithmic framework where these concepts can be incorporated explicitly by introducing parameters that take into account the “biological cost” of each of these changes.

1.3.1Homology¶

While here my focus is on protein structure, I also want to point out that in bioinformatics one of the key goals of sequence alignment is to identify homologous sequences (e.g., genes) in a genome. Two sequences that are homologous are evolutionarily related, specifically by descent from a common ancestor. The two primary types of homologs are orthologous and paralogous. While they are outside the scope of these notes, I note here that other forms of homology exist (e.g., xenologs, which are genes in different species that have arisen through horizontal gene transfer[8] rather than through vertical inheritance from a common ancestor, and often perform similar functions in their respective organisms despite their distinct evolutionary origins).

Orthologs arise from speciation events, leading to two organisms with a copy of the same gene. For example, when a single species A speciates into two species B and C, there are genes in species B and C that descend from a common gene in species A, and these genes in B and C are orthologous (the genes continue to evolve independent of each other, but still perform the same relative function).

Paralogs arise from duplication events within a species. For example, when a gene duplication occurs in some species A, the species has an original gene B and a gene copy B’, and the genes B and B’ are paralogus.

Generally, orthologous sequences between two species will be more closely related to each other than paralogous sequences. This occurs because orthologous typically (although not always) preserve function over time, whereas paralogous often change over time, for example by specializing a gene’s (sub)function or by evolving a new function. As a result, determining orthologous sequences is generally more important than identifying paralogous sequences when gauging evolutionary relatedness.

1.3.2Definitions¶

Following Kellis (2016), we introduce a simplified version of the alignment problem and make it more complex by adding the required ingredients. First, some definitions:

- Sequence

- In mathematics (and combinatorics) a sequence is a series of characters taken from an alphabet . For what concerns us, DNA molecules are sequences over the alphabet , RNA sequences over the alphabet , and proteins are sequences over the alphabet .

- Substring

- A consecutive part of a sequence. A sequence of length has substrings of length 1, substrings of length 2, , 2 substrings of length . Given the sequence “SUPERCALIFRAGILISTICHESPIRALIDOSO”, “FRAGILI” is a substring, but “SUPERDOSO” is not.

- Subsequence

- A set of characters of a sequence. The characters do not have to be consecutive, but they should be ordered like in the original sequence. Given the sequence “SUPERCALIFRAGILISTICHESPIRALIDOSO”, “FRAGILI” and “SUPERDOSO” are two subsequences, but “SOSUPER” is not. Formally, given a sequence , , with , is a subsequence of if there exists a strictly increasing sequence of indices of such that for each .

- Sequence alignment

- An alignment of two sequences and , defined over the same alphabet , is a table containing either a character from , or the gap symbol “

-”. In the table there is no column in which both characters are “-”, and the non-gap characters in the first line give back sequence , while the non-gap characters in the second line give back sequence .

1.3.3Problem formulation¶

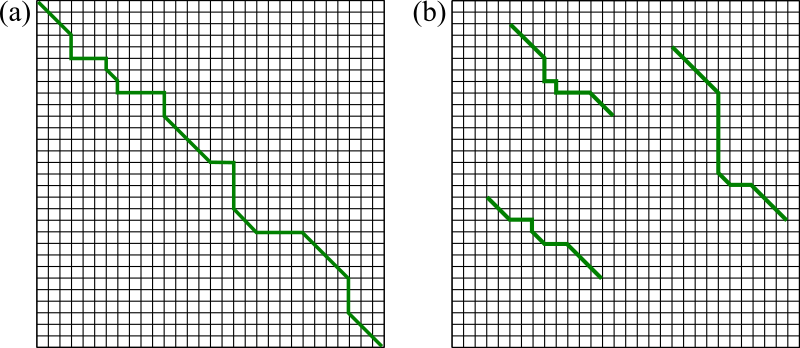

We warm up by considering the following simple problem: given two sequences, and , what is their longest common substring? We take the two following DNA fragments as examples: ACGTCATCA and TAGTGTCA. The problem can be solved by aligning the first characters of the two sequences, and then shifting one, say , by an integer amount . By computing the number of matching characters for every we can find the optimal alignment, which in this case is given by :

--ACGTCATCA

--xx||||---

TAGTGTCA---The first and third rows of the diagram above describe the alignment itself, as introduced earlier, showing that gaps are characters that align to a space, while the central row shows the character matches: here x and | stand for mismatches and matches, respectively. In this example, the longest common substring is GTCA. The algorithm I just described has a complexity of , where is the length of the shortest sequence.

A more complicated problem is to find the longest common subsequence (LCS) between two sequences. In the language we are introducing, this means allowing internal gaps in the alignment. The LCS between the and sequences defined earlier is

-ACGTCATCA

-|-||x-|||

TA-GTG-TCAIn this case the LCS is AGTTCA, which is longer than the longest common substring.

The LCS problem as formulated here is a particular case of the full sequence-alignment problem. In order to generalise the problem, we introduce a cost function that makes it possible to recast it in terms of an optimisation problem. Given two sequences and , we define as the “biological cost” of turning into . For the case just considered, I implicitly used as a cost function the Levenshtein (or edit) distance, which is defined as the minimum number of single-character edits required to change one string into another. In other words, the problem has been formulated by implicitly considering that all possible operations (mutations, insertions and deletions) are equally likely. In a more generalised formulation, each edit operation is associated to a specific cost (penalty) that should reflect its biological occurrence, as discussed later.

What about gaps? In general, the cost of creating a gap depends on many variables. There are varying degrees of approximations that can be taken in order to simplify the problem. At the zero-th order each gap can be assumed to have a fixed cost, as we implicitly did above. This is called the “linear gap penalty”. An improvement can be done by considering that, biologically, the cost of creating a gap is more expensive than the cost of extending an already created gap. This is taken into account by the “affine gap penalty” method, whereby there is a large initial cost for opening a gap, and then a small incremental cost for each gap extension. There are other (more complicated and more context-specific) methods that can be considered and that we will not analyse further here, such as the general gap penalty, which allows for any cost function, and the frame-aware gap penalty, which is applied to DNA sequences and tailors the cost function to take into account disruptions to the coding frame[9].

1.3.4The Needleman-Wunsch algorithm¶

Once we allow for gaps, the enumeration of all possible alignments (as done for the longest common substring method) becomes unfeasible. Indeed, the number of non-boring alignments, i.e. alignments where gaps are always paired with characters, in a sequence of size containing gaps (where ) can be estimated as

where we used the second-order Stirling’s approximation, . Note that this number grows very fast: for it is already larger than 1017. Considering that we also have to compute the score of each alignment, it is clear that the problem is untractacle with a brute-force method. Here is where dynamic programming enters the field.

Suppose we have an optimal alignment for two sequences , of length , and , of length , in which matches . The alignment can be conceptually split into three parts:

Left subalignment: The alignment of the subsequences

and . Middle match/mismatch: The alignment of

with (this could be a match or a mismatch). Right subalignment: The alignment of the subsequences

and .

The overall alignment score is the sum of the scores from these three parts: the score of the left subalignment, the score for aligning

Let be the score of the optimal alignment of and . Since and , the matrix storing the solutions (i.e. optimal scores) of the subproblems has a size of .

We can compute the optimal solution for a subproblem by making a locally optimal choice based on the results from the smaller subproblems. Thus, we need to establish a recursive function that shows how the solution to a given problem depends on its subproblems and can be used to fill up the matrix . At each iteration we consider the four possibilities (insert, delete, substitute, match), and evaluate each of them based on the results we have computed for smaller subproblems.

We start by considering the linear gap penalty model, and define , with , as the cost of a gap. We are now equipped to set the values of the elements of the matrix. Let’s consider the first row: the value of is the cost of aligning a sequence of length 0 (taken from ) to a sequence of length (taken from ), which can be obtained only by adding gaps, yielding . Likewise, for the first column we have . Then, we traverse the matrix element by element. Let’s consider a generic element : this is the cost of aligning the first characters of to the first characters of . There are three ways we can obtain this alignment:

Align to a gap (i.e. a gap is added to ): the total cost is ;

Align to a gap (i.e. a gap is added to ): the total cost is ;

and are matched: the total cost is , where is the cost of (mis)matching and .

Leveraging the cut-and-paste argument sketched above, the optimal cost is given by the largest of the three values. Formally,

This is a recursive relation: computing the value of any requires the knowledge of the values of its left, top, and top-left neighbours. Therefore, the fill-in phase amounts to traversing the table in row or column major order, or even diagonally from the top left cell to the bottom right cell. After traversing the matrix, the optimal score for the alignment is given by the bottom-right element, . In order to obtain the actual alignment we have to traceback through the choices made during the fill-in phase. It is helpful to maintain a pointer for each cell while filling up the table that shows which choice was made to get the score for that cell. Then the pointers can be followed backwards to reconstruct the optimal alignment.

The complexity analysis of this algorithm is straightforward. Each update takes time, and since there are elements in the matrix , the total running time is . Similarly, the total storage space is . For the more general case where the update rule is more complicated, the running time may be more expensive. For instance, if the update rule requires testing all sizes of gaps (e.g. the cost of a gap is not linear), then the running time would be ).

1.3.5Local alignment: the Smith-Waterman algorithm¶

The Needleman-Wunsch algorithm finds the best possible alignment across the entire length of two sequences. It tries to align every character from the start to the end of the sequences, which means both sequences are considered in their entirety. This is called “global alignment”, and it is most useful when the sequences being compared are of similar length and are expected to be homologous across their entire length.

Local alignment, on the other hand, focuses on finding the best alignment within a subset of the sequences. It identifies regions of similarity between the two sequences and aligns only those regions, ignoring the parts of the sequences that do not match well. Local alignment is particularly useful when comparing sequences that may only share a segment of similarity, such as when comparing domains within proteins, detecting conserved motifs, or identifying homologous regions in sequences that may not be overall similar[10], which is why it is very useful for the prediction of the 3D structure of proteins (or protein subdomains).

The most used method for local alignment is the Smith-Waterman algorithm, which is a modification of the Needleman-Wunsch algorithm. The key difference between the two lies in how the scoring matrices are constructed and scored. In Needleman-Wunsch, every cell in the matrix is filled to reflect the best global alignment, with the final alignment score found in the bottom-right corner of the matrix. Smith-Waterman, on the other hand, sets any negative scores to zero, which allows the algorithm to “reset” when the alignment quality dips. The highest score in the matrix indicates the end of the best local alignment, which is then traced back to a zero to identify the optimal aligned subsequence. This approach ensures that only the most relevant, highest-scoring local alignments are highlighted. Here is how the Smith-Waterman algorithm looks like in practice:

Initialisation: since a local alignment can start anywhere, the first row and column in the matrix are set to zeros, i.e. , .

Iteration : this step is modified so that the score is never allowed to become negative but it is reset to zero. This is done by slightly modifying Eq. (37) as follows:

Trace-back: starts from the position of the maximal number in the table and proceeds until a zero is encountered.

Figure 12:Example trace-back solutions generated by (a) global and (b) local alignment methods.

A visual comparison between the tables generated by global and local alignment algorithms is shown in Figure 12.

1.3.6Affine gap penalty¶

For both the global and local alignment algorithms introduced we have used a linear gap penalty: the cost of adding a gap is constant, regardless of the nature of the aligned character, or of the length of the gap. From the biological point of view, this means that an indel of any length is considered as the result of independent insertions or deletions. However, in reality long indels can form in single evolutionary steps, and in these cases the linear gap model overestimates their cost. To overcome this issue, more complex gap penalty functions have been introduced. As mentioned before, a generic gap penalty function would result in an algorithmic complexity worse than (where for simplicity I’m considering two sequences of the same length). Let’s see why. Any gap penalty function can be implemented in the Needleman-Wunsch or Smith-Waterman algorithms by changing the recursive rule, which can be done trivially for element by evaluating terms such as and , where is the cost of a gap of size . However, this means that the update of every cell of the dynamic programming matrix would take instead of , bringing the algorithmic complexity up to (for sequences).

However, the computational cost can be mitigated by using particular gap penalty functions. Here I will present the most common variant, which is known as the affine gap penalty. In this model, the cost of a gap of size is

where and are the opening and extension penalties, respectively, and . To incorporate affine-gap penalties into the Needleman-Wunsch or Smith-Waterman algorithms in an efficient manner, the primary adjustment involves tracking whether consecutive gaps occur in the alignment. This requires the alignment process to be split into three distinct cases: insertions, deletions, and matches/mismatches. Instead of using a single dynamic programming table as in the linear-gap penalty approach, three separate tables are used: for insertions, for deletions, and for the overall score.

In this setup, each entry in the table, denoted by , stores the best alignment score when the last column includes an insertion (i.e. a gap in the first sequence), and each entry in the table, , captures the best score for alignments ending in a deletion (i.e. a gap in the second sequence). As before, records the overall score. The recursive update rules of the three tables are

Note that with this algorithm each cell update is , and therefore the overall complexity remains the same ( or for same-length sequences).

1.3.7Substitution matrices¶

How is the substitution matrix determined? One possibility is to leverage what we know about the biological processes that underlie mutations. For instance, in DNA the biological reasoning behind the scoring decision can be linked to the probabilities of bases being transcribed incorrectly during polymerization. We already know that of the four nucleotide bases, A and G are purines, while C and T are pyrimidines. Thus, DNA polymerase is much more likely to confuse two purines or two pyrimidines since they are similar in structure. As a result, a simple improvement over the uniform cost function used above is the following scoring matrix for matches and mismatches:

| A | G | T | C | |

|---|---|---|---|---|

| A | +1 | -0.5 | -1 | -1 |

| G | -0.5 | +1 | -1 | -1 |

| T | -1 | -1 | +1 | -0.5 |

| C | -1 | -1 | -0.5 | +1 |

Here a mismatch between like-nucleotides (e.g. A and G) is less expensive than one between unlike nucleotides (e.g. T and C).

However, we can be more quantitative by taking a probabilistic approach. Here I will describe how the widely-used BLOSUM matrices are built (see also the original paper). We assume that the alignment score reflects the probability that two similar sequences are homologous. Thus, given two sequences and of the same length[11] for which we have an ungapped alingment, we define two hypotheses:

The alignment between the two sequences is due to chance and the sequences are, in fact, unrelated.

The alignment is due to common ancestry and the sequences are actually related.

Under the rather strict assumption (“biologically dubious, but mathematically convenient”, as aptly put in Eddy (2004)) that pairs of aligned residues are statistically independent of each other, so that the likelihoods associated to the two hypotheses, and , can be expressed as products of probabilities, viz.

where is the likelihood that residues and are aligned because correlated, while the product is the likelihood that the two residues are there by chance: their occurrence is unrelated and therefore the likelihood factorises in two terms that account for the average probability of observing those two residues in any protein.

In order to compare two hypotheses, a good score is given by the logarithm of the ratio of their likelihoods (the so-called log-odds score). Therefore, for our case the alignment score is

so that, thanks to the properties of logarithms and probabilities, the overall alignment score is the sum of the log-scores of the individual residue pairs. Considering 20 amino acids, there are 400 such log-scores, which form a 20x20 substitution (score) matrix. Each entry of the matrix takes the form

where is an overall scaling factor that is used to obtain values that can be rounded off to integers. Note that the previous definition can be formally substantiated (see e.g. Karlin & Altschul (1990)).

If (and therefore is positive), the substitution , and therefore their alignment in homologous sequences, occurs more frequently than would be expected by chance, suggesting that this substitution is favored in evolution. These substitutions are called “conservative”. As noted in Eddy (2004), this definition is purely statistical and has nothing directly to do with amino acid structure or biochemistry. Likewise, substitutions with , and therefore negative values of , are termed “nonconservative”.

The procedure applied to compute the and values starts with a collection of protein sequences.

The sequences are grouped into families based on their evolutionary relatedness. A common source for these families is the BLOCKS database, which contains aligned, ungapped regions of proteins that are highly conserved across members of the family.

Within these protein families, highly conserved regions (blocks) are identified. These regions are short segments of amino acid sequences that are believed to be important for the protein’s function and are conserved across different species.

Once blocks are identified, the sequences within each block are clustered, choosing a threshold value : two sequences that share more than this percentage of identical amino acids identity are clustered together, and only one representative from each cluster is used to avoid over-representation of very similar sequences.

Within each block, the actual counts of amino acid pairs are made.

The background frequencies are estimated by computing the overall frequency of each amino acid in the sequences, not considering any substitutions.

To evaluate the terms, for each position in the aligned block, we count how many times one amino acid (say, Alanine) is substituted by another amino acid (say, Glycine) in the aligned positions across the different sequences. For instance, if there is an alignment of 5 sequences, for each single position we make comparisons.

Of course, the final scores depend on : if is large, protein blocks that are still rather similar will be considered belonging to different clusters, and therefore compared to each other to derive the scores; the resulting matrix will be more sensitive in identifying closely related sequences but less effective for more distantly related sequences (and vice versa for small values of ). Common values for are , , and , which yield the matrices BLOSUM45, BLOSUM62, and BLOSUM80, with BLOSUM62 being the de-facto standard (and default) one.

Figure 13:The BLOSUM62 matrix. The amino acids are grouped and coloured based on Margaret Dayhoff’s classification. Non-negative values are highlighted. Credits to Ppgardne via Wikimedia Commons.

The BLOSUM62 matrix is shown in Figure 13. First of all, note that substitution matrices are symmetric, because the biological process of amino acid substitution is symmetric: as empirically observed, there is no preferred direction when one amino acid replaces another. Secondly, it is evident that conservative substitutions tend to be those between amino acids that are similar, as made evident by grouping the amino acids according to the classification introduced by Margaret Dayhoff.

Why the values of the matrix diagonal, representing the scores of “substituting” one amino acid with itself, are all different?

The rarer the amino acid is, the more surprising it would be to see two of them align together by chance. In the homologous alignment data that BLOSUM62 was trained on, leucine/leucine (L/L) pairs were in fact more common than tryptophan/tryptophan (W/W) pairs (, ), but tryptophan is a much rarer amino acid (, ). Run those numbers (with BLOSUM62’s original ) and you get +3.8 for L/L and +10.5 for W/W, which were rounded to +4 and +11.

1.3.8BLAST¶

The sheer volume of sequence data generated by modern-day high-throughput sequencing technologies presents a significant challenge. Databases now contain millions of nucleotide and protein sequences, each potentially spanning thousands of characters. When comparing a new sequence against these massive databases, traditional pairwise alignment methods like the ones we just discussed become computationally demanding. Indeed, performing a global or even local alignment between a query sequence and every sequence in a large database can require huge computational resources and time, especially as the number of sequences and their lengths continue to grow exponentially.

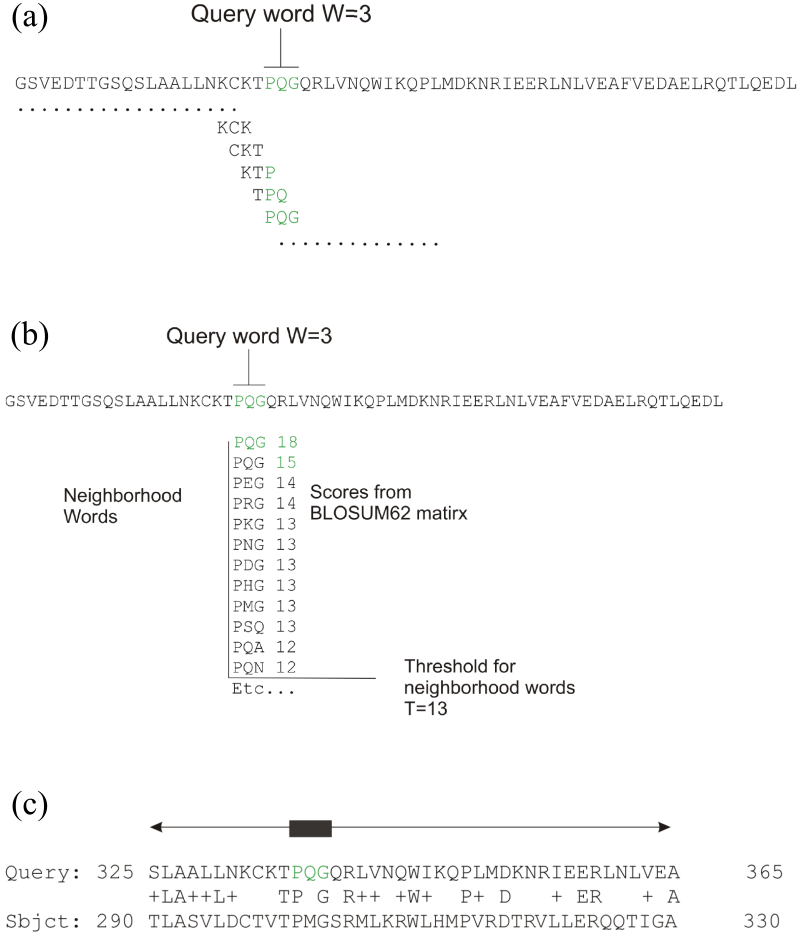

The need for a more efficient method resulted in the most cited paper of the 1990s, where BLAST (Basic Local Alignment Search Tool), now a critical tool in bioinformatics, was introduced. BLAST operates by finding regions of local similarity between sequences, which is more computationally feasible and faster than aligning entire sequences globally. The algorithm requires a query sequence and a target database. First of all, the query sequence is broken down into smaller fragments (called words or -mers). For each -mer, a list of similar words is generated, and only those with a similarity measure that is higher than a threshold are retained add added to the final -mer list. The similarity is evaluated by using a substitution matrix (BLOSUM62 is a common choice). Then, the target database is searched for matches to these words, extending the matches in both directions to find the best local alignments. BLAST assigns scores to these alignments based on the degree of similarity via a Smith-Waterman algorithm, with higher scores indicating closer matches.

Figure 14:The three most important steps of the BLAST algorithm. (a) Generate the initial -mers (). (b) Make the list of BLAST words using a threshold . (c) Extend the matches into both directions until the score drops below a predetermined threshold.

The BLAST algorithm can be broken down into the following steps[12]

Optional: remove low-complexity region or sequence repeats in the query sequence. Here “Low-complexity region” means a region of a sequence composed of few kinds of elements. These regions might give high scores that confuse the program to find the actual significant sequences in the database, so they should be filtered out. The regions will be marked with an X (protein sequences) or N (nucleic acid sequences) and then be ignored by the BLAST program. To filter out the low-complexity regions, the SEG program is used for protein sequences and the program DUST is used for DNA sequences. On the other hand, the program XNU is used to mask off the tandem repeats in protein sequences.

Make a -letter word list of the query sequence. The words of length (-mers) in the query sequence are listed “sequentially”, until the last letter of the query sequence is included. The method is illustrated in Figure 14(a). is usually 3 and 11 for a protein and a DNA sequence, respectively.

List the possible matching words. A substitution matrix (e.g. BLOSUM62) is used to match the words listed in step 2 with all the -mers. For example, the score obtained by comparing PQG with PEG and PQA is respectively 15 and 12 with the BLOSUM62 matrix[13]. After that, a neighborhood word score threshold is used to reduce the number of possible matching words. The words whose scores are greater than the threshold T will remain in the possible matching words list, while those with lower scores will be discarded. For example, if PEG is kept, but PQA is abandoned.

Organize the remaining high-scoring words into an efficient search tree. This allows the program to rapidly compare the high-scoring words to the database sequences.

Repeat step 3 to 4 for each -mer in the query sequence.

Scan the database sequences for exact matches with the remaining high-scoring words. The BLAST program scans the database sequences for the remaining high-scoring words, such as PEG. If an exact match is found, this match is used to seed a possible ungapped alignment between the query and database sequences.

Extend the exact matches to high-scoring segment pair (HSP). The original version of BLAST stretches a longer alignment between the query and the database sequence in the left and right directions, from the position where the exact match occurred. The extension does not stop until the accumulated total score of the HSP begins to decrease. To save more time, a newer version of BLAST, called BLAST2 or gapped BLAST, has been developed. BLAST2 adopts a lower neighborhood word score threshold to maintain the same level of sensitivity for detecting sequence similarity. Therefore, the list of possible matching words list in step 3 becomes longer. Next, exact matched regions that are within distance from each other on the same diagonal are joined as a longer new region. Finally, the new regions are then extended by the same method as in the original version of BLAST, and the scores for each HSP of the extended regions are created by using a substitution matrix as before.

List all of the HSPs in the database whose score is high enough to be considered. All the HSPs whose scores are greater than the empirically determined cutoff score are listed. By examining the distribution of the alignment scores modeled by comparing random sequences, a cutoff score can be determined such that its value is large enough to guarantee the significance of the remaining HSPs.

Evaluate the significance of the HSP score. Karlin & Altschul (1990) showed that the distribution of Smith-Waterman local alignment scores between two random sequences is

where and are the length of the query and database sequences[14], and the statistical parameters and depend upon the substitution matrix, gap penalties, and sequence composition (the letter frequencies) and are estimated by fitting the distribution of the ungapped local alignment scores of the query sequence and of a lot of (globally or locally) shuffled versions of a database sequence. Note that the validity of this distribution, known as the Gumbel extreme value distribution (EVD), has not been proven for local alignments containing gaps yet, but there is strong evidence that it works also for those cases. The expect score of a database match is the number of times that an unrelated database sequence would obtain a score higher than by chance. The expectation obtained in a search for a database of total length

This expectation or expect value (often called -score, -value or -value) assessing the significance of the HSP score for ungapped local alignment is reported in the BLAST results. The relation above is different if individual HSPs are combined, such as when producing gapped alignments (described below), due to the variation of the statistical parameters.

Make two or more HSP regions into a longer alignment. Sometimes, two or more HSP regions in one database sequence can be made into a longer alignment. This provides additional evidence of the relation between the query and database sequence. There are two methods, the Poisson method and the sum-of-scores method, to compare the significance of the newly combined HSP regions. Suppose that there are two combined HSP regions with the pairs of scores and , respectively. The Poisson method gives more significance to the set with the maximal lower score . However, the sum-of-scores method prefers the first set, because is greater than . The original BLAST uses the Poisson method; BLAST2 uses the sum-of scores method.

Show the gapped Smith-Waterman local alignments of the query and each of the matched database sequences. The original BLAST algorithm only generates ungapped alignments including the initially found HSPs individually, even when there is more than one HSP found in one database sequence. By contrast, BLAST2 produces a single alignment with gaps that can include all of the initially found HSP regions. Note that the computation of the score and its corresponding -value involves use of adequate gap penalties.

The -value is the single most important parameter to rate the quality of the alignments reported by BLAST. For instance, if for a particular alignment with score , it means that there are 10 alignments with score that can happen by chance between any query sequence and the database used for the search. Therefore, this particular alignment is most likely not very significant. By contrast, values much smaller than 1 (e.g 10-3 or even smaller) are likely to signal that the sequences are homologous. On most webserver, it is possible to filter out all matches that have an -value larger than some threshold. For instance, on the NCBI webserver, the “expect threshold” defaults to 0.05.

Note that the sequence database used to search for matches is preprocessed first, which increases further the overall computational efficiency. With BLAST, the algorithmic complexity of searching the database for a sequence of length is only . An online webserver[15] to run BLAST can be found here.

1.3.9Multiple sequence alignment¶

Pairwise sequence alignment suffers from some issues that are intrinsic to it, and becomes glaring when trying to align sequences that are distantly related. In particular, all pairwise methods depend in some way or another on a number of parameters (scoring matrix, gap penalties, etc.), and it is hard to tell what is the “best” alignment if the method finds multiple alignments with the same score. Moreover, a pairwise alignment is not necessarily informative about the evolutionary relationship (and therefore about possibly conserved amino acids or whole motifs) of the sequences that are compared.

In order to overcome these issues, a number of multiple sequence alignment (MSA) methods have been developed. MSA extends the concept of pairwise protein alignment to simultaneously align three or more sequences, providing a broader view of evolutionary relationships, structural conservation, and functional regions among a group of proteins. Aligning multiple sequences make it possible to identify conserved amino acids that may be critical for protein function or stability and therefore are biologically significant. This, in turn, can help to predict structural features, functional motifs, and evolutionary patterns across species, providing a fundamental tool to build phylogenetic trees or inform the classification of proteins into families.

In principle, MSA can be carried out with the dynamic programming algorithms we already introduced. For instance, the recursive rule of the Needleman-Wunsch algorithm for globally aligning three sequences , , and , which extends Eq. (37), is

where the dynamic programming “table” is now three-dimensional, and is the cost function of aligning , , and , which can be either residues or gaps. It is straightforward to see that the dimensionality of the table grows linearly with the number of sequences , and therefore the algorithmic complexity is . The exponential dependence on makes this approach practically unfeasible when working with real-world examples.

Unfortunately, better-performing exact methods, i.e. methods that provide the global optimal solution by construction, do not exist (yet). As a result, we have to rely on heuristic methods, which only find local minima. One commonly used approach for multiple sequence alignment is called progressive multiple alignment. Here the prerequisite is that we need to know the evolutionary tree, often called the guide tree, that connects the sequences we wish to align, which is usually built by using some low-resolution similarity measure, often based on (global) pairwise alignment. Then, we start by aligning the two most closely related sequences in a pairwise fashion, creating what is known as the seed alignment. Next, we align the third closest sequence to this seed, replacing the previous alignment with the new one. This process continues, sequentially adding and aligning each sequence based on its proximity in the tree, until we reach the final alignment. Note that this is done using a “once a gap, always a gap” rule: gaps in an alignment are not modified during subsequence alignments. These methods have a computational complexity of , which makes it possible to align thousands of sequences.

Figure 15:Representation of a protein multiple sequence alignment produced with ClustalW (which has been superseded by Clustal Omega). The sequences are instances of the acidic ribosomal protein P0 homolog (L10E) encoded by the Rplp0 gene from multiple organisms. The protein sequences were obtained from SwissProt searching with the gene name. Only the first 90 positions of the alignment are displayed. The colours represent the amino acid conservation according to the properties and distribution of amino acid frequencies in each column. Note the two completely conserved residues arginine (R) and lysine (K) marked with an asterisk at the top of the alignment. Credits to Miguel Andrade via Wikipedia Commons.

One of the most commonly used tools to perform MSAs is Clustal Omega, which is a command-line tool, but it is also available as a webserver. Clustal Omega builds the guide tree using an efficient algorithm (adapted from Blackshields et al. (2010)) which has an algorithmic complexity of , making it possible to generate multiple alignments of hundreds of thousands sequences. An example of MSA is shown in Figure 15.

1.4Threading¶

If pair alignment tools find that a given query sequence is found to share more than 30% of its sequence with another, then it is common to think that a reasonable model for that sequence can be built. By contrast, an alignment yielding a similarity of 20% - 25% could be purely coincidental. In reality, things are more complicated, as it has been shown that proteins with rather high sequence identity could be very different from a structural point of view (Brenner et al. (1998)). This and other results show that a single quantity, such as sequence identity, is not enough to determine the 3D similarity between two proteins, and more numbers (such as the length of the chain or of the well-aligned regions) are required to build reliable models. For instance, it makes sense that for a short 50-residue protein a 40% sequence identity would be required to generate a good match, while 25% may be enough for 250 residues. However, these numbers have a purely statistical value.

A better approach is to go beyond pairwise alignments by using sequence database searching programs such as BLAST which, as we have seen, provide E-values or similar quantities that estimate the reliability of a sequence match by looking at it in the context of the whole library of sequence scores. In addition, more sophisticated BLAST versions (such as PSI-BLAST) make it possible to obtain good matches with less than 20% sequence identity.

Thanks to these tools, and to the ever-growing number of sequences stored in databases, “simple” database searches are often enough to build a model of the query sequence out of a reliable homolog of known structure. However, if no matches are found, or if an independent confirmation is required, we need methods that do not rely on sequence homology. In this case we recast the problem of protein structure prediction as a problem of choice of the 3D structure best fitting the given sequence among many other possible folds. However, what can be the source of “possible” structures?

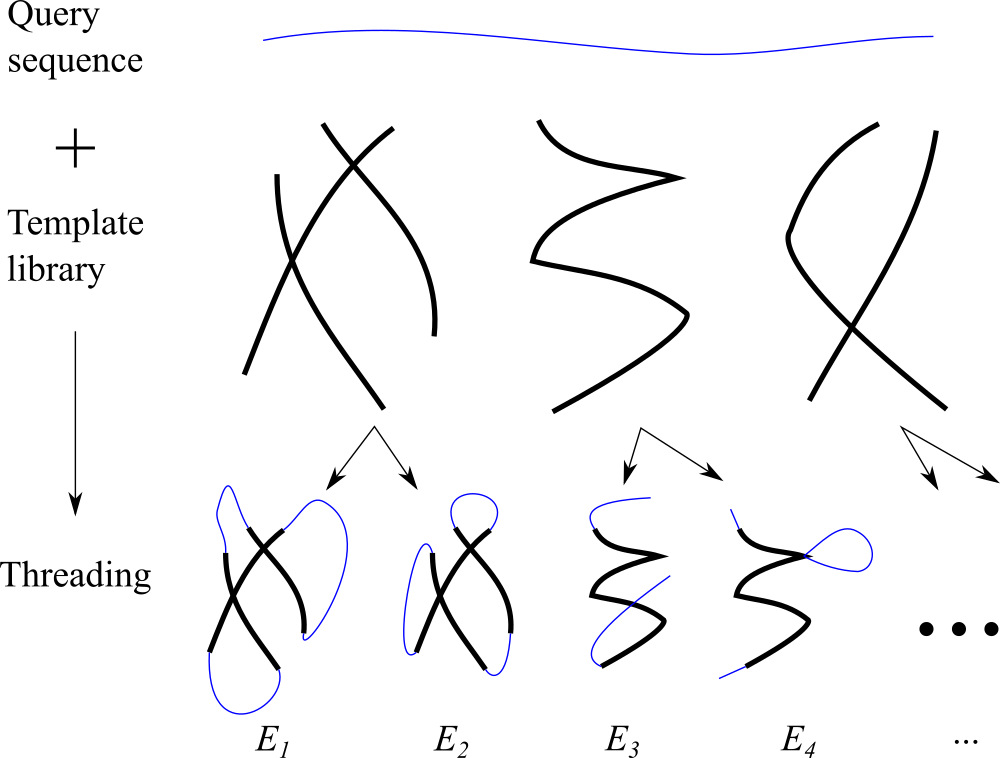

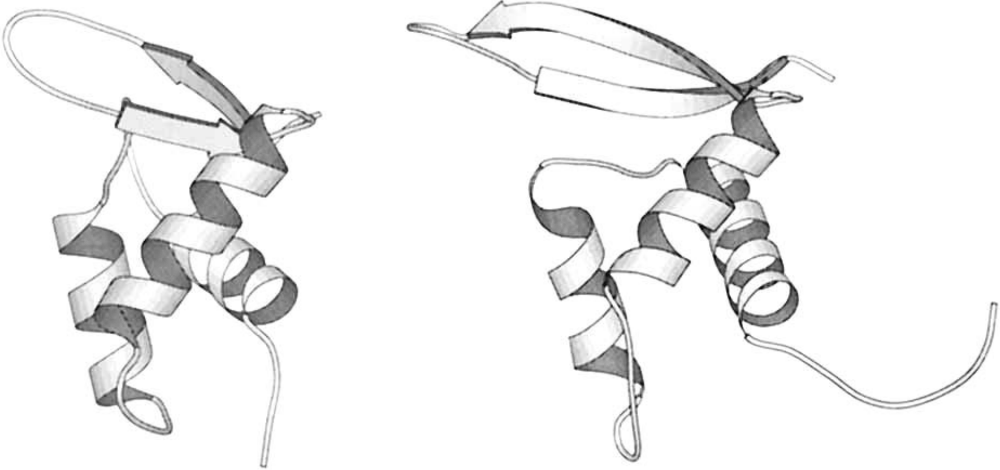

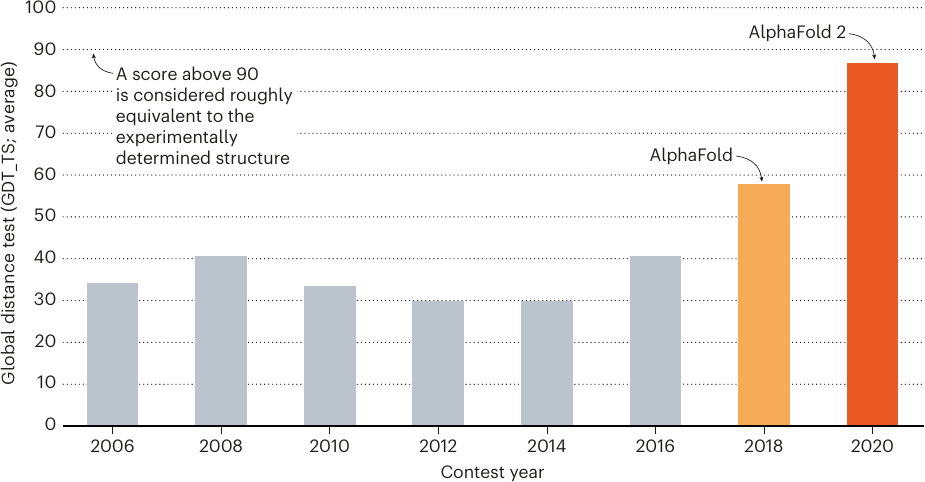

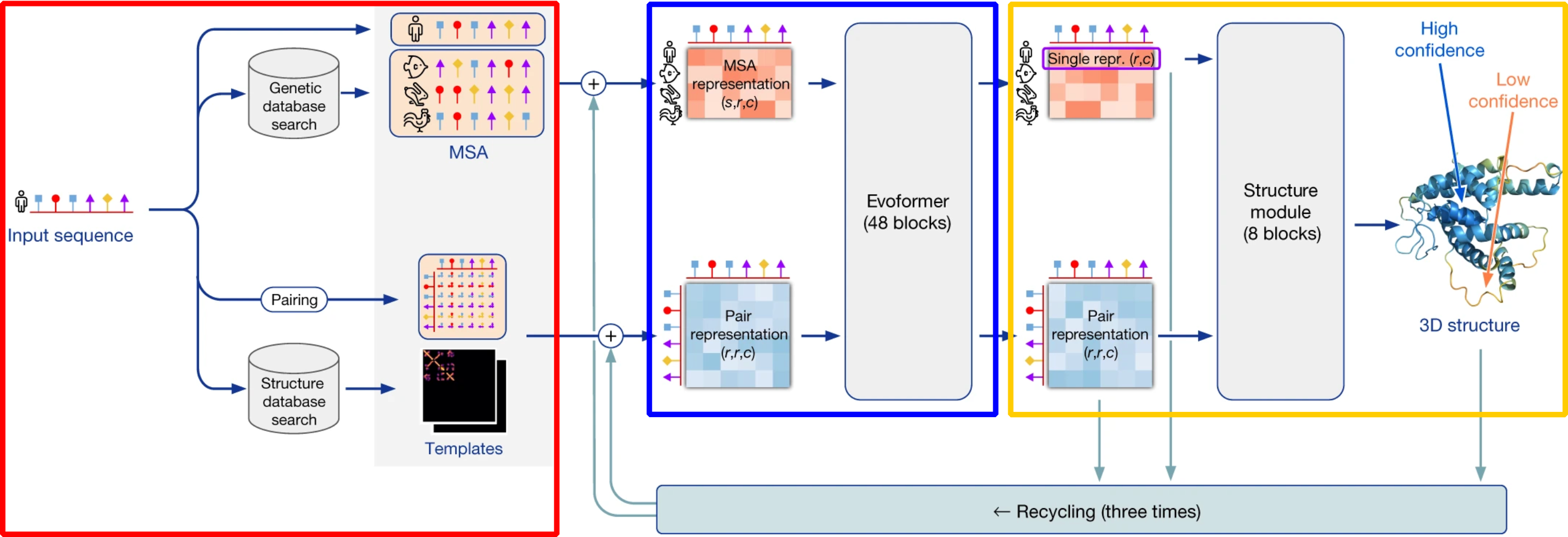

While an a priori classification is sometimes possible (see, e.g., Murzin & Finkelstein (1988)) or Ptitsyn et al. (1985)), a more practical answer is the Protein Data Bank (PDB) where all the solved and publicly available 3D structures are collected. However, using structures stored in the PDB turns a “prediction” problem into a “recognition” one: a fold cannot be recognized if the PDB does not contain an already solved analog. This limits the power of recognition. Nevertheless, there is an important advantage associated to this procedure: if the protein fold is recognized among the PDB-stored structures, one can hope to recognize also the most interesting feature of the protein, namely its function—by analogy with that of an already studied protein.