import numpy as np

import math1The theoretical value¶

The theoretical value of the helicity has been derived in the notes, and can be written as a function of the transfer matrix eigenvalues and as

The following function computes and returns it for the given values of the chain length and the Zimm-Bragg parameters and . Note that extreme values of the parameters will give raise to numerical issues (and possibly errors). Those can be avoid by taking care of edge cases, and/or by explicitly using the expressions for and to simplify the relation above.

def theoretical_zimm_bragg(N, s, sigma):

l_1 = 1 + (s - 1) / 2 + math.sqrt(((s - 1) / 2)**2 + sigma * s)

l_2 = 1 + (s - 1) / 2 - math.sqrt(((s - 1) / 2)**2 + sigma * s)

r = l_2 / l_1

theta = (l_1 - 1) / (l_1 - l_2)

theta *= 1 + r**(N + 1) - 2 / N * l_2 / (l_1 - l_2) * (1 - r**N)

theta /= 1 + (l_1 - 1) / (1 - l_2) * r**(N + 1)

return theta2The Monte Carlo Metropolis method¶

2.1Introduction¶

The model prescribes (or makes it possible to compute) the statistical weight of any chain configuration. We can leverage this knowledge to sample the available configurations in equilibrium by using a specific Monte Carlo (MC) technique known as the Metropolis method.

The discussion below is taken from the Frenkel and Smit book.

Consider the whole phase space of the system. By definition, each state is visited with a probability given by its statistical weight, . We now wish to find a way of sampling the phase space so that the equilibrium distribution, given by the weights , is retained. In order to do so we define the transition probability matrix to go from state to state , with the obvious constraint that its elements have to be such that the equilibrium distribution is conserved. A sufficient condition to fulfill such a constraint is that, for each pair ,

which is also known as “detailed balance”. The transition matrix can be decoupled as a product of two matrices,

where is the probability of proposing the change, and is the probability to accept the change, also known as “acceptance probability”. In the simple scheme we will consider the matrix to be symmetric, so that the first equation of this section can be written as

and therefore

Any choice of the elements that satisfy the above condition will sample the phase space in equilibrium (given enough simulation time). The choice made by Metropolis et al, which is by far the most common, is

In a microscopic system (i.e. a system where it is possible to specify a Hamiltonian), the statistical weight takes a simple (and famous) form:

where is the energy of configuration , so that the acceptance probability is just

where is the energy difference between the two configurations.

2.2Observables¶

In the statistical mechanics approach, a quantity of interest can be evaluated by taking an average over the ensemble of the equilibrium configurations:

where is the value of for the configuration . Since with the MC method sketched above we sample equilibrium configurations, we can approximate the above relation as

where runs over the simulation iterations. Note that in most MC schemes (like the one considered here), configurations that are “close in time” (i.e. are separated by few iterations) are correlated. As a result, it is often sufficient to update the averages every iterations rather than after each iteration.

Nota Bene: in real systems it is not possible to sample the whole phase space, but we need to simulate for long enough that enough representative states are sampled. A necessary, but by no means sufficient, condition to judge the quality of the sampling is that the average value plotted against the simulation time converges to some value, perhaps with small fluctuations.

2.3Implementation in the Zimm-Bragg model¶

Here we implement the Monte Carlo Metropolis algorithm in this way:

We start with a configuration , which has a statistical weight

We propose a new configuration , which has a statistical weight , which is identical to but for the conformation of a single residue, which is randomly chosen and flipped (

chorhc).We accept the change (i.e. the chain acquires the new configuration ) with probability

We go back to step 1, regardless of whether we accepted or rejected the flipping.

By iterating this procedure many times, we sample the phase space of the configurations. In the code below, we first run a certain amount of “Monte Carlo steps”, where each MC step is defined as iterations, where is the length of the chain, to “equilibrate” the system, i.e. to obtain a typical equilibrium configuration. After this equilibration we begin to keep track of the helicity, so that at the end of the simulation we can return its value, averaged over the ensemble of generated configurations.

We now have to discuss how to evaluate the statistical weight associated to a configuration. First of all, note that we don’t need the single statistical weights of the and configurations, but only their ratio. Moreover, in the Zimm-Bragg model the total weight of the chain can be decomposed in the product of the weights associated to each residue, viz.

where is the statistical weight of residue and depends on its state (h or c) and possibly on its interaction with its neighbours. These are all the possibilities:

is in the coil state:

is in the helical state, and the residue before is also

h:is in the helical state, but the residue before is a

c:

Since each weight is the product of the single weights associated to each residue, all the weights relative to the non-flipping residues cancel out. Therefore, if the randomly chosen residue is we have that

When computing the weight ratio, there are two components, one due to the propagation term, and one due to the cooperative (initiation) term. The former is easy to compute, as it is if the flips to h, and if it flips to c. The latter is slightly more complicated, as we have to take into account several possibilities:

if is not the first nor the last residue of the chain, there are two cases that result in a cooperative contribution, depending on the state of its two neighbours:

if the initial state of the triplet is

chcorhch, flipping the central residue decreases the number of helices by one, and therefore we get back the cooperative price and divide the weight ratio byif the initial state of the triplet is

hhhorccc, flipping the central residue increases the number of helices by one, and therefore we pay the cooperative price and multiply the weight ratio by

If is the first residue and the one that follows it is in the

cstate:if the new state is

hwe are creating a new helix, so we multiply the weight ratio byif the new state is

cwe are removing a helix, so we divide the weight ratio by

Similarly, if is the last residue and the one that precedes it is in the

cstate:if the new state is

hwe are creating a new helix, so we multiply the weight ratio byif the new state is

cwe are removing a helix, so we divide the weight ratio by

The code below checks for these alternatives in the (hopefully) clearest possible way.

def metropolis_zimm_bragg(N, s, sigma):

# initialize the chain randomly (1 for helix, 0 for coil)

chain = np.random.choice([0, 1], size=N)

# return the ratio between the statistical weights due to the flipping of the index-th residue

def change_probability(chain, index):

current_state = chain[index]

new_state = 1 - current_state

# calculate the ratio due to the propagation term

if new_state == 0:

prob = 1 / s

else:

prob = s

# and that relative to the cooperative (initiation) term

if index == 0: # first residue

if chain[index + 1] == 0:

if new_state == 1:

prob *= sigma

else:

prob /= sigma

elif index == N - 1: # last residue

if chain[index - 1] == 0:

if new_state == 1:

prob *= sigma

else:

prob /= sigma

# here we take into account two cases: either a helix is split in two or a helix is created

# in both cases we pay the cooperative price

elif new_state != chain[index - 1] and new_state != chain[index + 1]:

prob *= sigma

# here we take into account the case where the flipped residue either removes an helix, or join two helices

elif new_state == chain[index - 1] and chain[index - 1] == chain[index + 1]:

prob /= sigma

return prob

# Monte Carlo simulation

fraction_helical = 0

count = 0

for i in range(steps):

for _ in range(N):

# randomly pick a residue to flip

index = np.random.randint(N)

# calculate the probability change

prob = change_probability(chain, index)

# accept or reject the change

if prob > 1 or np.random.rand() < prob:

chain[index] = 1 - chain[index]

# skip the first steps to let the chain equilibrate

if i > steps // 10:

fraction_helical += np.mean(chain)

count += 1

return fraction_helical / count3A simple test¶

Here we test the two functions on a chain made of 20 residues, for a specific pair of and values. Feel free to play around with these values and see if what happens aligns with your intuition.

N = 100

sigma = 1e-7

s = 0.5

steps = 10000 # number of Monte Carlo steps

theta_th = theoretical_zimm_bragg(N, s, sigma)

theta_MC = metropolis_zimm_bragg(N, s, sigma)

print(f"Theoretical fraction of helical content: {theta_th}")

print(f"Numerical fraction of helical content: {theta_MC}")Theoretical fraction of helical content: 1.9599988494582937e-07

Numerical fraction of helical content: 0.0

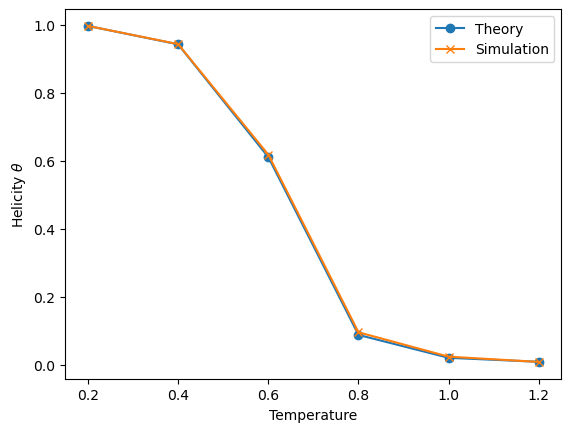

4The melting curve¶

Here I show a slightly more complicated example where we use the two functions above to evaluate the fraction of helicity of a chain as a function of temperature. Note that to make it more realistic we consider to have an enthalpic contribution, , and an entropic contribution, . If you recall, in the two-state (uncooperative) model, the melting temperature is just

Here I chose to set so that if . I then set and . Since it has an enthalpic component, is temperature-dependent, and therefore should be recomputed every time varies. This is done within the loop.

Note that I’m using arbitrary units of measurements chosen so that the most important parameters are of order 1.

import matplotlib.pyplot as plt

theta_th = []

theta_MC = []

N = 10

sigma = 1e-3

s_delta_H = -1

s_delta_S = -1

steps = 50000

Ts = (0.2, 0.4, 0.6, 0.8, 1.0, 1.2)

for temperature in Ts:

print("Evaluating temperature", temperature)

beta_f_h = (s_delta_H - temperature * s_delta_S) / temperature

s = math.exp(-beta_f_h)

theta_th.append(theoretical_zimm_bragg(N, s, sigma))

theta_MC.append(metropolis_zimm_bragg(N, s, sigma))

plt.plot(Ts, theta_th, 'o-', label="Theory")

plt.plot(Ts, theta_MC, 'x-', label="Simulation")

plt.xlabel("Temperature")

plt.ylabel(r"Helicity $\theta$") # note the r before the ", which makes it possible to use latex notation

plt.legend()

plt.show() # not strictly required in a notebookEvaluating temperature 0.2

Evaluating temperature 0.4

Evaluating temperature 0.6

Evaluating temperature 0.8

Evaluating temperature 1.0

Evaluating temperature 1.2