In biophysics, reactions (in the sense given above) are extremely important, and are often the focus of any enhanced sampling technique discussed in the context of biomolecules. However, these same techniques have been very often introduced in, or can be easily adapted to, other contexts[1].

1Collective variables and reaction coordinates¶

The phase space of a many-body system is a highly-dimensional manifold where each point corresponds to a microstate. As the system evolves in time, the collection of points in phase space it visits form a trajectory that fully describes its evolution. In many cases it is useful to project the trajectory on a space with a lower dimensionality: the resulting quantities can be used to obtain coarse-grained models, but also to describe the essential features of a system in a way that is easier to analyse and visualise.

In the context of molecular simulations, any quantity that is used to describe a system in a coarse-grained fashion is a collective variable (CV), and can be written as a function of (all or a subset of) the microscopic degrees of freedom . In condensed-matter physics, collective variables are often called order parameters. By contrast, in the biophysics and biochemistry worlds the most used term is reaction coordinate (RC), defined as a parameter or set of parameters that describe the progress of the reaction of interest, serving as a way to quantify and track the changes occurring within a system as it evolves through the reaction pathway. Since we will be focussing on reactions, in the following I will tend to refer to the coarse-grained variable(s) of interest as RC or reaction coordinate(s), but most of what I will say translates naturally to any other CV.

The primary purpose of a reaction coordinate is to provide a simplified description of the system’s thermodynamics, making it possible to monitor and analyze the progress of a reaction in terms of a single or a few variables: by using a reaction coordinate we are reducing the complexity of a many-body system with many degrees of freedom to obtain a simplified description that can be used to investigate the reaction itself, effectively applying a dimensionality reduction procedure. This simplification is essential for understanding the microscopic underpinnings of the reaction of interest. Defining a reaction coordinate makes it possible to draw a diagram such as the one shown in Figure 1, which are often called free-energy profiles or landscapes, where the variation of the free energy along a particular reaction coordinate or collective variable is plotted.

Figure 1:A sketch of the free energy landscape of a system displaying two basins separated by a transition state.

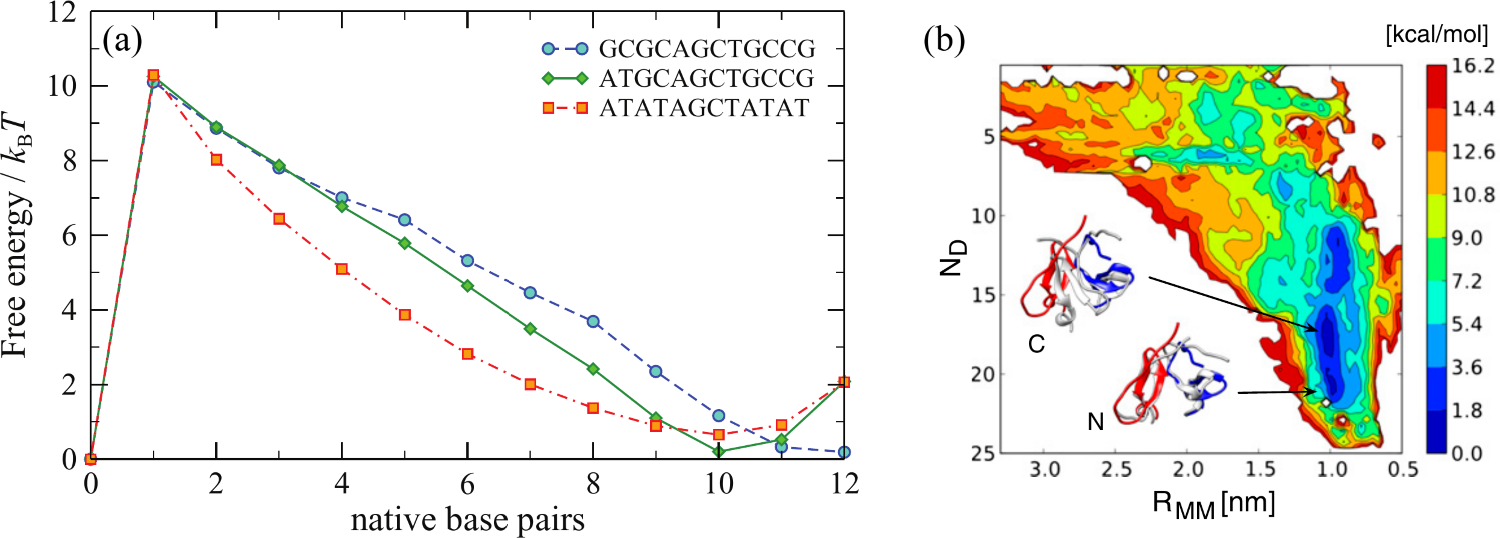

The choice of the RC depends on the specific process being studied and it is not, in general, unique. It can be a simple geometric parameter such as bond length, bond angle, or dihedral angle, or a more complex collective variable that captures the overall structural changes in the system, such as the distance between two key functional groups, the position or coordination number of a particular atom, or the solvent-accessible surface area of a biomolecule. Figure 2 shows two “real-world” examples, one taken from simulations of the oxDNA coarse-grained model, and the other from atomistic simulations of proteins.

Figure 2:(a) Free energy profiles for three different duplexes of length 12 as a function of the number of complementary (native) base pairs of the two strands. The oxDNA simulations for each duplex were run at their respective melting temperatures, namely 48 °C, 73 °C, and 80 °C. Adapted from Šulc et al. (2012). (b) Free-energy surface of the dimerization process of the fibritin foldon domain (PDB code: 1RFO). The two CVs are the distance between the centres of mass of the monomers, , and the number of specific monomer-monomer contacts, . Structures representative of the two free-energy basins N and C (gray) are compared with dimer arrangement in the trimeric experimental structure (red and blue). Adapted from Barducci et al. (2013).

Once we have chosen a RC to characterise the reaction of interest, which is in general not an easy task, and an active area of research in itself, we can use it to describe the reaction. We can formally see how if we calculate the partially-integrated partition function (see e.g. Hénin et al. (2022)) by integrating the Boltzmann factor over all the degrees of freedom at constant , viz.:

where is the Dirac delta distribution function. Note that the same procedure has been already carried out in the context of coarse graining, where the partial integration on the phase space made it possible to obtain a simplified description of the system, showing that the process of dimensionality reduction we apply is strictly the same in the two cases.

In turn, can be used to obtain a free-energy profile (sometimes called free-energy surface if is multidimensional) such as the ones presented in Figure 1 and Figure 2, which is defined as

Now consider an observable that can be written as a function of . Its ensemble average can be formally written as

which can be simplified by using Eq. (1) to

where and correspond to the minimum and maximum values of , and we have defined the marginal probability density

where we have used the partition function .

2Reactions and rare events¶

Consider a system that can switch, possibly reversibly, between two macrostates, and . Here the term macrostate is used loosely to indicate ensembles of microstates where the system resides for times that are much larger than the microscopic characteristic time; in thermodynamic parlance, and , which are sometimes called basins, should be either metastable or equilibrium states, and therefore separated by a free-energy barrier larger than the thermal energy.

In this context the free-energy barrier[2] between from , , is defined as the difference between the free energy of , and that of the transition state, , which is the highest free-energy point along the reaction pathway connecting to [3]. Note that controls not only the probability of jumping from to , but also the rate of the reaction, which is proportional to (see e.g. Eyring (1935) and Evans & Polanyi (1935)). See Figure 1 for a graphical definition of these quantities.

It is often the case that what interests us is the reaction itself rather than the and states, which are often known (and possibly have been characterised) beforehand. In this case, simulations starting from one of the two states, say , would remain in for sometime, then quickly jump to state , where it would again reside for some time before switching basin once again, and so on. If the free-energy barrier between the two basins is large (compared to ), the number of transitions from to and back will be very small. Therefore, using unbiased simulations[4] to sample the transition itself, for instance to evaluate the free-energy landscape as in Figure 1, requires a large computational effort which is mostly wasted in sampling uninteresting parts of the phase space.

In this part we will understand how the sampling of the transition from to can be enhanced by using advanced computational techniques collectively known as rare event sampling techniques, which are methods used also outside the context of molecular models (see Le Priol et al. (2024) for an interesting example on climate-change-related extreme events).

3Umbrella Sampling¶

The first technique I will present is the venerable umbrella sampling (US), which was introduced in the Seventies by Torrie and Valleau.

The basic idea behind umbrella sampling is to bias the system along a chosen reaction coordinate by adding a so-called biasing potential[5] that confines the system to different regions (also known as windows) along that coordinate. By running multiple simulations with biasing potentials centred on different points along the reaction coordinate, the entire range of interest can be sampled. After sufficient sampling is done in each window, the bias introduced by the additional potentials can be removed to obtain the unbiased free energy profile (or any other observable of interest) along the reaction coordinate.

A typical umbrella sampling simulation thus comprises several steps, which I will discuss separately.

3.1Choosing the reaction coordinate¶

This is arguably the most important step, since choosing a sub-optimal RC can sometimes massively increase the required simulation time. Fortunately, most of the times the choice is either obvious (e.g. the concentration of the product in a chemical reaction), or dictated by the observable(s) of interest (see below for an example).

3.2Selecting a biasing potential¶

The role of the biasing potential is to confine a system within a (usually rather narrow) region of the reaction coordinate. As such it must be a function of the reaction coordinate(s) only, without any explicit dependence on any of the microscopic . The most common choice is a harmonic potential, whose shape gives the method its name and usually takes the form

where is the position of the minimum of the potential and is the spring constant. Other choices are possible (see e.g. here or here for examples of biases that are not differentiable and therefore can only be used in Monte Carlo simulations).

3.3Partitioning the reaction coordinate into windows¶

Next, we need to split the range of interest, , into windows. The most common strategy is to divide the reaction coordinate into equispaced windows centred on , with , where is the total number of windows (and hence of independent simulations). The distance between two neighbouring windows, , which is often taken as a constant, should be chosen carefully: on one hand it should be as large as possible to make as small as possible; on the other hand, should be chosen so that there is some overlap between adjacent windows to prevent discontinuities in the free energy profile. This is to ensure that the neighboring windows provide sufficient sampling for accurate reweighting. An often good-enough first estimate can be made by assuming that , and then by choosing a -value for which is of the order ot , i.e. that the value of the biasing potential calculated in the midpoint separating two neighbouring windows is of the order of the thermal energy.

In practice, the number, size and spacing of the windows depends on the curvature of the free energy profile along the reaction coordinate, which is not known beforehand. Smaller windows may be needed in regions with steep gradients or large energy barriers, while larger windows may suffice in more gradually changing regions. Fortunately, given the independent nature of the simulations that run in each window, the partitioning can be improved upon a posteriori: if one realises that the explored range is not sufficient, it can be extended by adding simulations with biasing potentials centred beyond and/or . Sampling can also be improved by adding simulations in regions of the RC where the is steeper.

At the end of this procedure, each window will be assigned a biasing potential .

3.4Sampling¶

Molecular dynamics or Monte Carlo simulations are performed within each window, allowing the system to equilibrate and sample configurations consistent with the biasing potential. In general ensuring that a given window has converged is not necessarily straightforward, but can be done by techniques such as block averaging.

3.5Reweighting and combining the data¶

In the final step we gather the data from each window and combine it together to calculate the unbiased quantities of interest. I will first show how to unbias the data from each window, and then how to join all the results together.

In analogy with Eq. (5) we can defined a biased marginal probability density for the -th windows, , as

where the biased partially-integrated partition function has also been defined. We note that the biasing factor depends only on and therefore, since integration is performed on all degrees of freedom but , can be moved outside of the integral. If we do so and then multiply and divide by we obtain

where represents an unbiased ensemble average and is the marginal probability density of the -th window. Note that, being an ensemble average, does not depend on , and therefore it is a (in general unknown) constant[6]. As a consequence, we can obtain the unbiased marginal probability density up to a multiplicative constant:

where I use the symbol in place of , since the former is unnormalised and therefore not a proper probability density. This procedure is known as unbiasing, and it is a special case of histogram reweighting[7]. Applying Eq. (10) yields functions that are shifted relative to each other because of the unknown constant. The total can be recoverd by stitching together all the , utilising the regions of the -space where each pair of windows overlap significantly to find the unknown multiplying constants[8]. There are several methods available to perform this task. Here I will present two such methods: a simple least-squares fit and the (much more powerful) WHAM.

3.5.1Least-squares method¶

Consider two windows and (with ), whose unnormalised marginal probability densities overlap in a -region . We want to find the constant that, multiplying , minimises the mean-squared error between the two overlapping portions of the histograms, which is defined as

Imposing we find

In practice, with this method the 0-th window data are unchanged, while all the subsequent ones are rescaled one after the other by repeatedly applying Eq. (12). Once the final histogram is obtained, we can either normalise it (if we need a proper probability density), or use it to compute the associated free-energy profile

where I made explicit the fact that classical free energies are always specified up to an additive constant, which can be chosen freely. If we are interested in a reaction between states and , it is common to set or to 0, while for potentials of mean force (see for instance the example below) it is customary to set .

3.5.2The Weighted Histogram Analysis Method (WHAM)¶

WHAM is a widely used reweighting technique for combining data from multiple biased simulations to obtain an unbiased estimate of the free energy profile. The basic idea behind WHAM is to reweight the probability distributions obtained from each window simulation such that they are consistent with each other and with the unbiased distribution. The reweighting process involves applying a set of equations that account for the biasing potentials applied in each window and the overlap between adjacent windows.

The following derivation has been inspired by Guillaume Bouvier’s “traditional” derivation, which in turn is based on the original one.

We start by defining some accessory quantities that make it possible to generalise the method beyond a specific Umbrella Sampling to any case where one wants to join multiple overlapping histograms:

Latin letters , and will be used to index different simulations rather than windows, since one may want to run multiple simulations for the same window to improve statistics. The total number of simulations is .

The reaction coordinate is split into bins, and is the -th bin, i.e. .

is the biasing factor applied to the -th bin of the -th simulation. For the type of Umbrella Sampling described above, .

The output of simulation is the best estimate for the biased probability , which we define as , where is the number of counts in bin and is the number of generated samples, with both quantities referring to the -th simulation.

is the (unbiased) probability of bin , that is, the quantity we wish to calculate.

The biased probability of bin of simulation is then:

where is a normalising constant that ensures that , viz.

Using the above definitions, the best estimate for the unbiased probability of the -th bin of simulation is

We assume that can be written as a weighted sum of all the reweighted histograms, , as follows:

where the set of weights are normalised, e.g. . The WHAM method boils down to ensuring that the weights are chosen so as to minimise the expected variance of , which is defined as

where the average is taken over all the windows that have sampled . Defining we obtain

We note that . Assuming that simulations and are uncorrelated[9], and therefore

We can minimise the variance with respect to the set of weights subjects to the constraints by using a Lagrange multiplier . The quantity to minimise is therefore

whose derivative reads

whence we obtain

Applying the normalisation constraint we find

which can be solved for , yielding

Using this latter relation in Eq. (23) gives the optimal value for weight :

We now need to write in terms of the simulation output. Recalling that and using Eq. (16) we can write

The variance of the original histograms can be approximated by first considering that the probability of having counts in a specific histogram bin is given by the binomial distribution,

where is the probability of the event associated with the bin and is the total number of counts stored in the histogram. According to the Poisson limit theorem, in the limit of large and small [10] the binomial distribution can be approximated by the Poisson distribution

whose mean and variance are both equal to . We connect this result with our derivation by noting that the probability for bin and simulation is the biased probability , and therefore

Using this relation in Eq. (27) yields

which gives for the weights

Substituting these weights in Eq. (17) and using the fact that (see Eq. (16)) we obtain the WHAM set of equations

Note that this is a system of non-linear equations, which are complemented by the equations (15) that define the . This sytem of equations can be solved either with non-linear solvers (such as scipy’s fsolve) or iteratively, setting the to some initial values (e.g. all equal to 1), using them to evaluate the through Eq. (33), which in turn are used to update , and so on, repeating the process until convergence is achieved.

3.6A real-world example¶

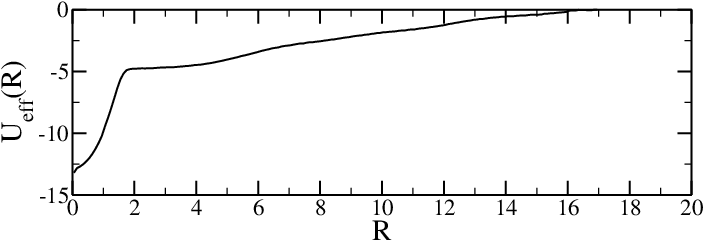

As discussed in the chapter on coarse-grained force fields, the effective interaction between two objects composed of multiple interacting units (atoms, molecules or beads) can be estimated in the dilute limit (i.e. at low density) as

where is the distance between the two objects (defined e.g. as the distance between the two centres of mass) and is the associated radial distribution function. For complicated objects composed of many parts, estimating with sufficient accuracy through means of unbiased simulations requires an ungodly amount of computation time and is therefore unfeasible. Here I show an example where this issue has been overcome by using umbrella sampling.

Using the language introduced in this section, is the reaction coordinate and the observable of interest, where is the marginal probability density.

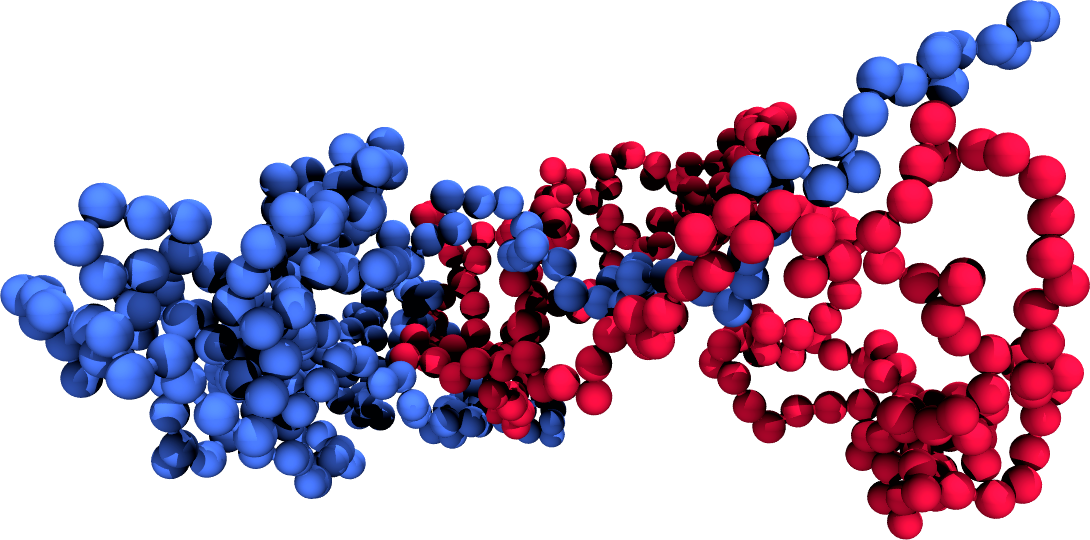

(a)A simulation snapshot showing the two chains (coloured differently).

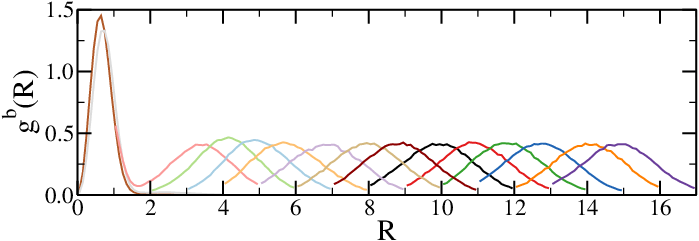

(b)The biased radial distribution functions, with each colour corresponding to a different simulation.

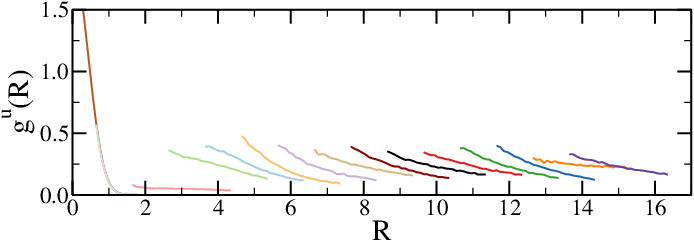

(c)The unbiased radial distribution functions, with each colour corresponding to a different simulation.

(d)The reconstructed effective interaction

Figure 3:The results of umbrella sampling simulations: the raw data is unbiased and then combined together to yield the final free-energy profile. Here the reaction coordinate is the distance between the centres of mass of two polymers.

Figure 3 shows the results of umbrella sampling simulations of a system composed of two polymer chains, where the chosen reaction coordinate is the distance between the two centres of mass, , and the final output is the effective chain-chain interaction as a function of . Figure 3a shows a snapshot of the two chains, Figure 3b shows the raw (biased) data for all the windows , and Figure 3c contains the , unbiased according to Eq. (10). Finally, Figure 3d contains the effective interaction, obtained with the WHAM method and shifted so that it vanishes at large distances.

4Metadynamics¶

Umbrella sampling is a technique that is relatively simple ot implement and works well in many real-world systems. However, it suffers from several drawbacks when applied to non-trivial systems. For instance, it scales badly with the number of collective variables, and requires a trial-and-error approach to determine the spring constants and window partitioning. Among the plethora of methods developed to overcome some (or all) of these limitations, here I will present one of the most successfull and used ones: metadynamics, which was introduced for the first time in Laio & Parrinello (2002).

In metadynamics simulations, the system is pushed away from the regions of the phase space where it would tend to reside by adding a history-dependent biasing potential. In this way, if the simulation is long enough, the system will diffuse over an almost flat biased free-energy profile. The original information (i.e. the free-energy profile of the unbiased system) can be reconstructed from the “learned” biased potential or from the histogram of the biased CVs, depending on the metadynamics flavour employed (more on this latter).

In practice, in ordinary metadynamics, after the set of collective variables has been chosen, an unbiased simulation is started (often from a configuration that is within a basin). Then, every fixed amount of time steps, the bias potential is updated as follows:

where measures time by counting the number of times that the bias potential has been updated, is the bias potential at time , is the value of the CV(s) at time , and and are two parameters which set the height and width of the gaussians that are used to progessively fill the wells of the free-energy profile. Leveraging Eq. (35), the bias potential at time can be written as

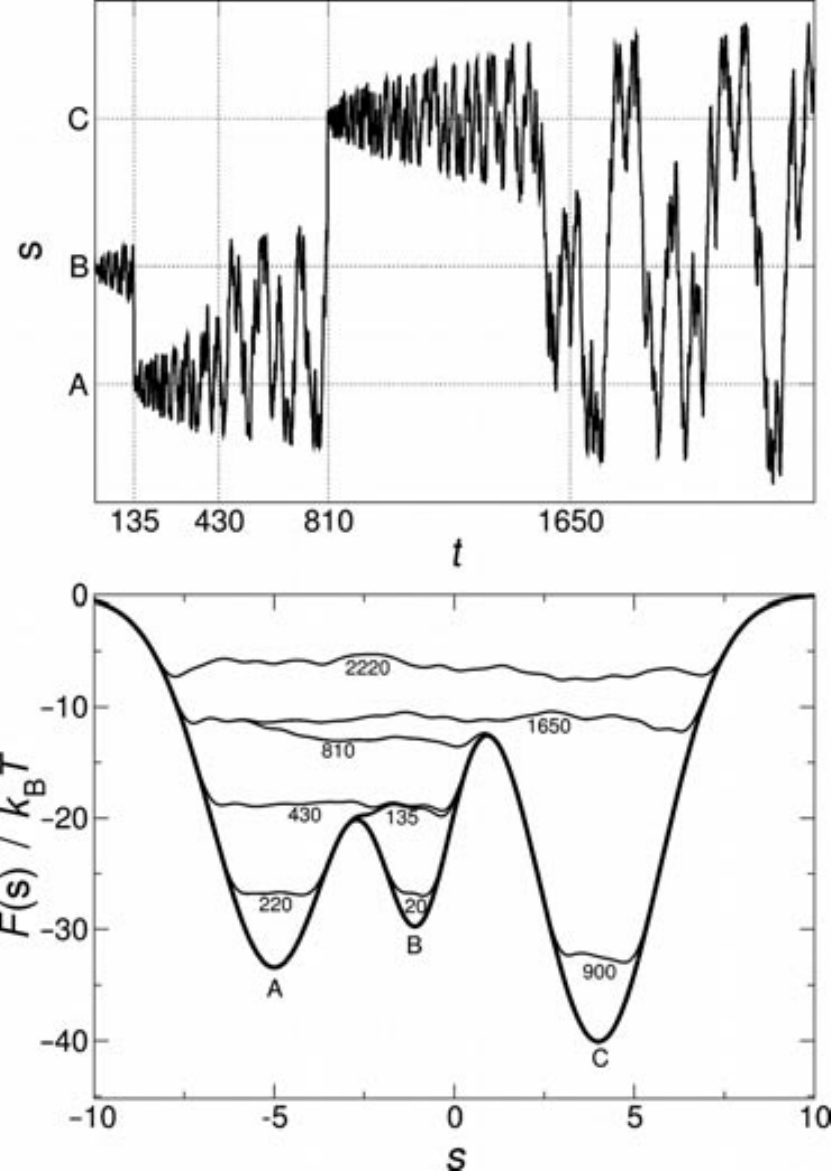

Figure 4:Example of a metadynamics simulation in a one-dimensional model potential. The time is measured by counting the number of Gaussians deposited. (Top) Time evolution of the collective variable during the simulation. (Bottom) Representation of the progressive filling of the “true” underlying (“true”) potential (thick line) by means of the Gaussians deposited along the trajectory. The sum of the underlying potential and of the metadynamics bias is shown at different times (thin lines). Taken from Barducci et al. (2011).

To see how the bias affects the exploration of the phase space of a simple system, consider the simple one-dimensional potential with three minima , , and presented in the bottom panel of Figure 4. The system is initialised to be in the local minimum , where it would most likely remain if sampled with unbiased simulations, since the barriers separating it from the other minima are larger than . However, if metadynamics is used, the deposited Gaussians make the bias potential grow, until for the system is pushed out of , moving into , which is the closest basin (in terms of barrier height). Here, the Gaussians accumulation starts anew, filling the underlying free-energy basin at . At this point, the system can freely diffuse in the region around and . Eventually (), the bias potential fills this region, and the system can easily access also the basin of . Finally, at also this minimum is compensanted by the bias, and the dynamics of the system becomes diffusive over the whole free-energy profile (see the top panel of Figure 4).

This simple example illustrates the main features of metadynamics:

It accelerates the sampling of the phase space by pushing the system away from local free-energy minima.

It make it possible to efficiently explore reaction pathways, since the system tends to escape the minima passing through the lowest free-energy saddle points.

No a priori knowledge of the landscape is required, at variance with umbrella sampling, where the optimal choice of the bias stiffness and of the number of windows depend on the local steepness of the free energy and of the CV range of interest.

The method is intrinsically parallelisable. Indeed, multiple simulations can be run to independently explore the phase space, and each simulation contributes to the bias potential, which is shared among all “walkers” (Raiteri et al. (2005)).

After a transient, the bias potential and the underlying free energy are formally connected by the following relationship

where is an immaterial constant. In reality, the long-time oscillates around the real free energy rather than converging to it, since the deposition of the Gaussians continue even when the dynamics become diffusive. Moreover, it is not always obvious when simulations should be stopped.

These two issues can be solved by well-tempered metadynamics, introduced in Barducci et al. (2008). With this variation, the height of the Gaussian to be deposited at the given point decreases exponentially with the strength of the bias potential already present in . In other words, Eq. (35) becomes

where , which has the dimension of a temperature, controls the rate at which the height decreases, which is always inversely proportional to the time spent by the simulation in the CV point where the Gaussian is to be deposited. This inverse relationship guarantees that the bias potential converges. However, in this case the relationship between the long-time potential bias and the free energy, which is given by Eq. (37) in ordinary metadynamics, becomes

which suggests that has a double role: it decreases the height of the deposited Gaussians, therefore damping the sampling fluctuations, but it also controls the effective temperature at which the CV is sampled. Ordinary metadynamics is recovered in the limit, while the limit corresponds to ordinary MD simulations.

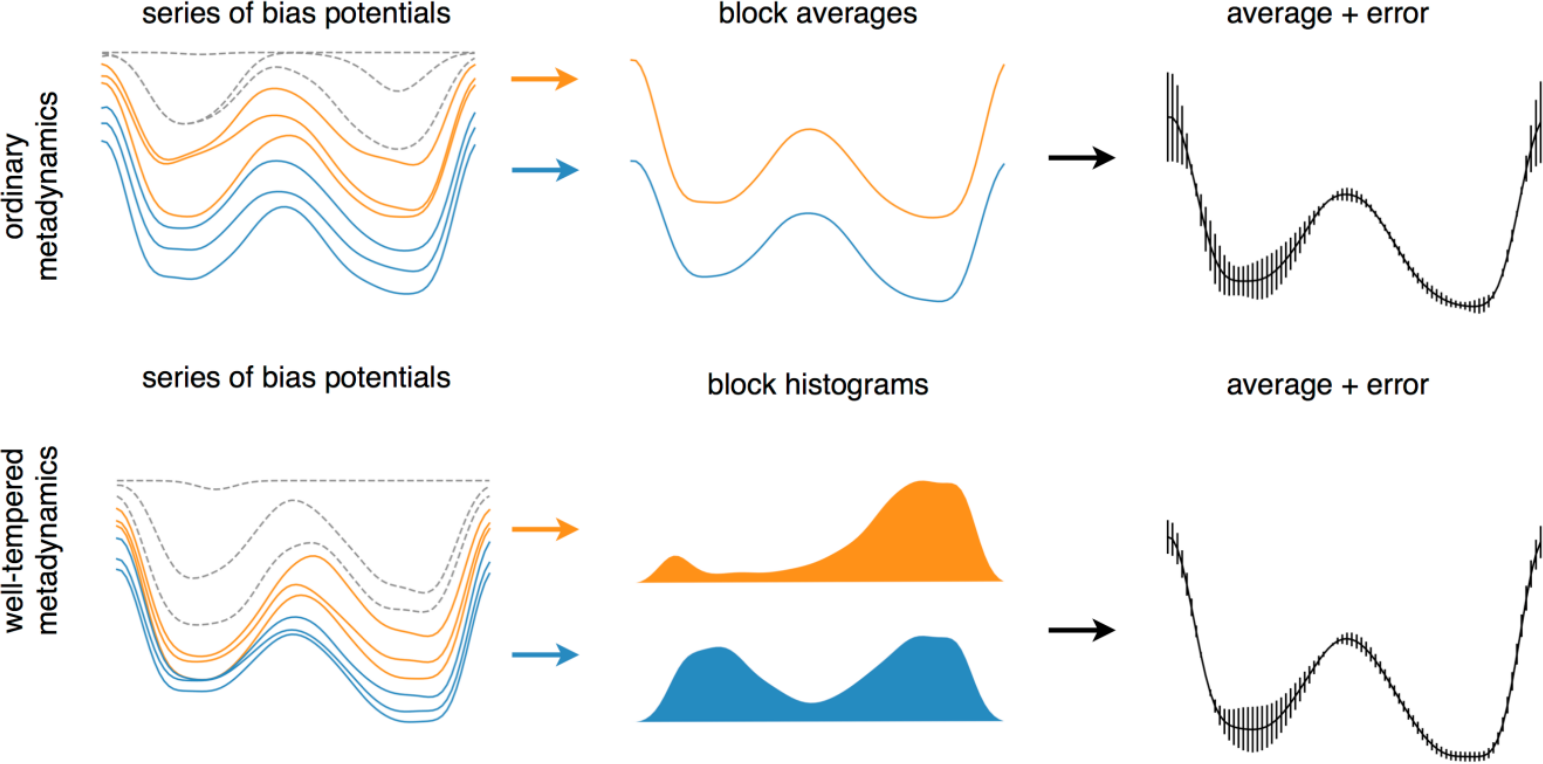

In ordinary metadynamics, after an initial (transient) time , the free-energy minima are filled with the bias potential and the free-energy profile is essentially flat. However, does not converge to the free energy since the process of Gaussian depositing never stops. However, the profile of the bias potential retains, on average, the same shape. Therefore, the best estimator for the free-energy profile at time is the time-average of rather than its its instantaneous value[11], e.g.:

The error associated to Eq. (40) can be estimated by using a block analysis. With this technique, the equilibration part of the simulation is discarded, and the rest is split in blocks. The standard error associated to the average bias potentials of the blocks is computed as a function of . If is sufficiently long, the error estimate will be approximately independent of the number of the blocks, and therefore can be used to assess the statistical accuracy of the free energy profile.

Figure 5:Schematic representation of error calculation in ordinary (upper) and well-tempered (lower) metadynamics. The initial part of the simulations (dashed profiles) sould always be discarded, while block analysis is applied over the rest to estimate the final free-energy profile. For ordinary and well-temperered metadynamics, the block analysis is performed on the bias potentials and on the histograms of the biased CVs, respectively. Taken from the Supplemetary Information of Bussi & Laio (2020).

This procedure is sketched in the top part of Figure 5.

In well-tempered metadynamics, the final free-energy profile can be extracted from the final bias potential by exploiting Eq. (39). However, a better way, which also makes it possible to estimate the bin-wise error, is to perform a block analysis (described above) to the histogram of the biased CV . With this analysis, Eq. (39) is replaced by[12]

where the fact that we are dealing with histograms is made explicit by the subscript. The error on the free energy of bin is the standard error associated to the histogram block average converted to the error on the free-energy which, following Bussi & Laio (2020), can be written as

where is the block index and the variance is computed across all blocks. This procedure is presented in the bottom part of Figure 5.

5Thermodynamic & Hamiltonian integration¶

The rare-event methods discussed above make it possible to map the free energy profile along one or more reaction coordinates, and to characterise the free-energy barriers and intermediates that separate the (meta)stable states. However, in many cases we are interested only in the free-energy difference between two states that can be connected by a reversible transformation (e.g., turning off interactions, changing the positions of nature of atoms or molecules, changing the thermodynamic parameters such as temperature or density).

Here I will give a brief introduction to numerical techniques that address this challenge by introducing a structured approach to systematically connect different states of a system. Both methods rest on the same idea: free energy differences can be obtained by “transforming” one state into another while carefully accounting for the changes encountered along the way. Instead of attempting to capture the full complexity of a system’s phase space in a single calculation, these methods break the problem into manageable steps, enabling precise and controlled exploration of the underlying thermodynamics.

5.1Thermodynamic integration¶

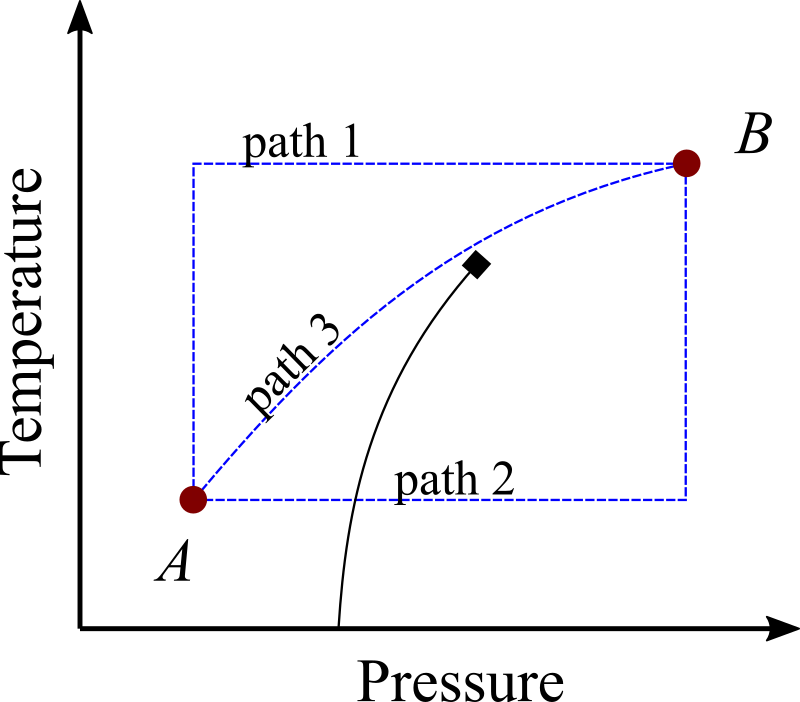

Given two distinct states and differing for their thermodynamic conditions (temperature, pressure, etc.), the thermodynamic integration (TI) method makes it possible to compute their free energy difference, . The idea is to define a reversible path in the space of the thermodynamic variables that connects and [13], and then run simulations along this path to estimate the derivatives of the free energy that can be integrated to yield the free energy difference, since

where is the thermodynamic variable that varies along the path. In reality, the integral in Eq. (43) is computed by discretising the interval into the values , running one or more simulations for each , and then applying a method to approximate a definite integral, such as the trapezoidal rule.

Figure 6:A phase diagram featuring a gas-liquid phase transition ending in a critical point (marked with a diamond). The two points and are connected by three paths (blue dashed lines). Two paths (1 and 3) are reversible, but in path 3 both and vary simultaneously, making it less useful for TI applications. By contrast, path 2 crosses the phase transition, and therefore it is not reversible.

Figure 6 shows examples of reversible and irreversible paths in a phase diagram. Although paths 1 and 3 are both reversible and therefore in principle, amenable to TI, in practice it is more convenient to change a single thermodynamic variable at a time as done in path 1.

For one-component systems, common thermodynamic variables are temperature , pressure , number of particles , volume , density , and a state is defined by two thermodynamic variables (often either , ) or )). The integration can follow three directions:

Integration along isotherms: is constant, varies. The Helmholtz free energy difference is given by

where the integrand can be obtained e.g. with canonical simulations.

Integration along isobars: is constant, varies. The Gibbs free energy difference is given by

where is the enthalpy, which can be evaluated with isobaric-isothermal () simulations.

Integration along isochores: is constant, varies. The Helmholtz free energy difference is given by

where the integrand can be obtained with canonical simulations.

5.2Hamiltonian integration¶

With Hamiltonian integration (HI), it is possible to estimate the free energy difference between systems that are in the same thermodynamic state but have different Hamiltonians, and . This is accomplished by introducing a new Hamiltonian that uses a parameter to interpolate between and . The most common form used in HI is

so that and . The free energy of the system becomes a function of , viz.

where is the “molecular” partition function (which includes the momenta contributions). Deriving Eq. (48) with respect to yields

which can be integrated to obtain the free energy difference between and :

Note that the above equation is formally identical if is kept fixed instead of , i.e. in the isobaric-isothermal ensemble.

5.3A complete example¶

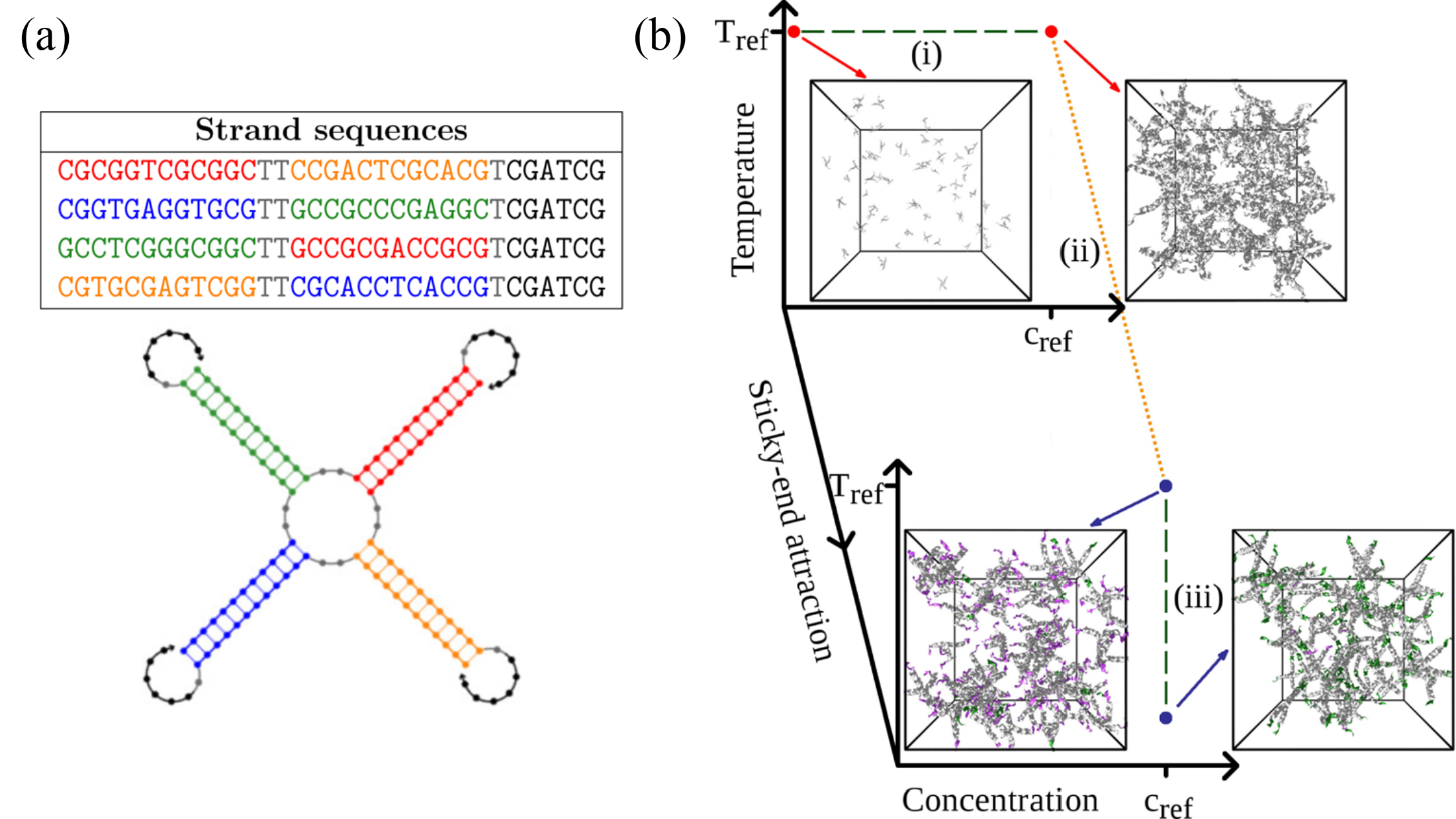

Advances in materials science and nanotechnology show that the specificity of the Watson-Crick mechanism offers numerous possibilities for realizing nano- and mesoscopic supramolecular constructs entirely made up of DNA. These can be used as testing grounds for theoretical predictions (e.g. Biffi et al. (2013)), to build novel materials and devices for technological applications (e.g. Liu et al. (2024)), but also as a biophysical tool (see e.g. Biffi et al. (2013) and Samanta et al. (2024)).

A simple but powerful framework to use DNA to build materials is provided by DNA nanostars, which are DNA constructs composed of a certain number of arms ending with sticky ends, whose collective phase behavior has been investigated both experimentally and numerically investigated. In Rovigatti et al. (2014), we have used oxDNA to numerically demonstrate that the ground state[14] of tetravalent DNA nanostars in solution is a disordered state (a “liquid”) rather than a crystal, which is a rather surprising result.

Figure 7:(a) The sequence used to build tetravalent DNA nanostars, and a sketch of the secondary structure of a single nanostar. (b) Cartoon of the protocol employed to compute the free energy of the fluid phase of a system made of tetravalent DNA nanostars. The path connecting the initial and final states comprises thermodynamic integration parts (green dashed lines), and a Hamiltonian integration part (orange dotted line) that connects a system of tetramers with switched-off sticky ends to the fully interacting fluid. The snapshots show typical configurations at the marked state points. Here, DNA strands are colored according to the following scheme: DNA sequences interacting only through excluded volume are in gray, unbound sticky ends are in violet, and bound ones are in green. Adapted from Rovigatti et al. (2014).

The sequences and secondary structure of the strands composing the nanostars is shown in Figure 7(a), while the thermodynamic/Hamiltonian path used to evaluate the free energy of the liquid state is shown in Figure 7(b). The integration starts at high temperature and low density, where the system is well approximated by an ideal gas, for which we have an analyitical expression for the free energy. Here we used a modified Hamiltonian in which the sticky ends that provide inter-nanostar bonding have been disabled by setting . We then used Eq. (44) to obtain the free energy of a high- liquid at the target density, and then HI to obtain the free energy of a high- system with interacting sticky ends (). Finally, we used Eq. (46) to evaluate the free energy of the system at low temperature, where essentially all inter-nanostar bonds are formed and the system is very close to its classical ground state.

In order to demonstrate that the liquid state has a lower free energy than the crystal, we used a particular HI technique, called Einstein crystal and described thoroughly in Vega et al. (2008), to evaluate the free energy of the latter. This method is a bit out of scope here, but if you are interested you can read our paper to find out what we did.

6Alchemical free-energy calculations¶

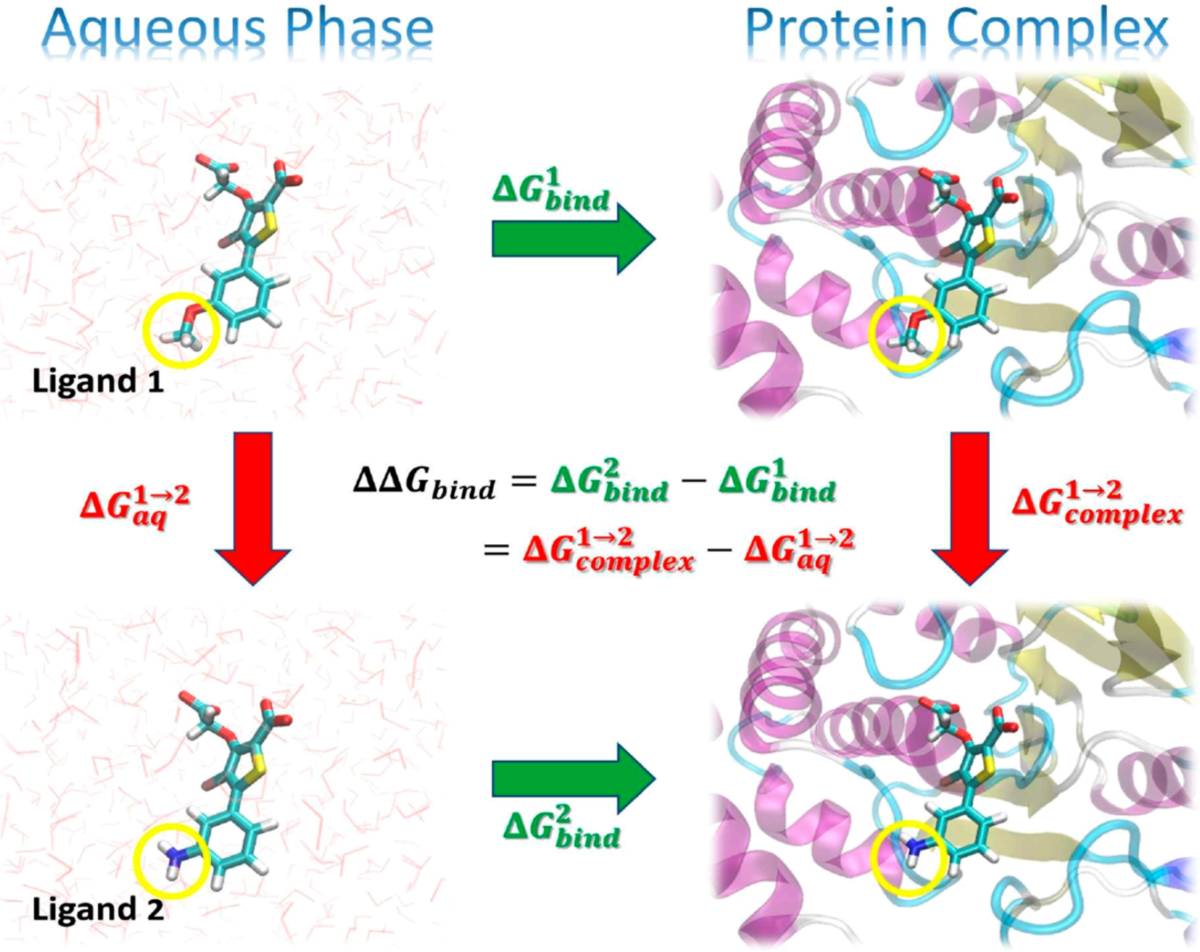

In chemistry and biophysics, it is common to employ so-called alchemical free energy (AFE) calculations to quantify free energy differences between two thermodynamic states. The basic idea is the same underlying Hamiltonian integration. Indeed, the term “alchemical” refers to the use of nonphysical transformations, akin to the transmutation of elements imagined by medieval alchemists, to connect two states by parametrising the transition through a coupling variable varying between 0 to 1. In biophysics, AFE calculations are applied to diverse problems, such as determining solvation free energies, calculating relative binding affinities, and studying the effects of mutations in biomolecules.

Figure 8:Illustration of a thermodynamic cycle for the relative binding free energy, , between two ligands (“Ligand 1” and “Ligand 2”). Taken from York (2023).

One common application is shown in Figure 8. In the figure, the green arrows represent the absolute binding free energy, , of each ligand (indicated by the superscript), which involves changing the environment from unbound in the aqueous phase to bound in a complex with the protein target. These quantities are experimentally measurable but are challenging to directly compute, as the change in the environment can be considerably complicated. The red arrows represent alchemical transformations where Ligand 1 is mutated into a similar Ligand 2 in the same environment. These transformations are frequently more amenable to practical computations. The yellow circles in the figures indicate the region of each ligand that undergoes the most significant changes in the alchemical transformation and would likely be modeled using a “softcore potential” during the alchemical transformation.

There are three principal methodologies underpin AFE calculations, each offering distinct approaches to determining free energy differences. Here I want to introduce them very briefly, so that the reader is aware of their existence.

6.1Hamiltonian integration¶

As discussed above, free energy changes are obtained by integrating the thermodynamic derivative along the alchemical pathway. This approach requires simulations at several discrete points, where the average derivative is computed. Numerical integration over these points yields the total free energy difference. HI is known for its robustness and straightforward implementation but can be computationally intensive due to the need for dense sampling along the coordinate.

6.2Free Energy Perturbation (FEP)¶

FEP directly estimates the free energy difference between states based on the overlap of their phase spaces. The idea is to run simulations at values of , so that the total free energy change is

The free-energy difference between neighboring states is estimated using the exponential averaging of the energy difference (the so-called Zwanzig equation), where the average can be performed in the , or in both systems, so that there are three possible ways of computing :

where , is the Fermi function, and is an arbitrary constant. In principle, FEP can calculate free energy changes by sampling only the end states, and . However, if the sampled configurations from poorly represent those of , the ensemble average

6.3Nonequilibrium Work (NEW) methods¶

Nonequilibrium Work (NEW) methods provide a distinct approach to alchemical free energy calculations, departing from traditional equilibrium-based techniques. These methods calculate free energy differences by performing rapid transformations between thermodynamic states, where the system is intentionally driven out of equilibrium. At the heart of these calculations lies the Jarzynski equality, a foundational equation in nonequilibrium statistical mechanics. The Jarzynski equality is expressed as:

where is the work done during the transformation, and the overline denotes an ensemble average over multiple realizations of the nonequilibrium process. Using the chain rule,

where is the duration of the transformation. The Jarzynski equality equality reveals that the free energy difference between two states can be computed from an ensemble of nonequilibrium work measurements, even if the system does not equilibrate during the transformation.

In practice, the transformation is performed by smoothly varying the coupling parameter from 0 to 1 over a finite time , which generates a trajectory where the work done on the system is recorded. A series of such trajectories is then used to compute the exponential average of , providing an estimate of .

Unlike equilibrium methods that require exhaustive sampling across several windows, NEW simulations focus on generating multiple rapid trajectories, each starting from a well-equilibrated initial state. These trajectories can be run independently, allowing for significant parallelization and reduced wall-clock time. However, the accuracy of NEW methods depends on the careful sampling of initial conditions and the balance between transformation speed and system relaxation. Extremely fast transformations may lead to poor convergence, as large deviations from equilibrium can bias the results.

To provide an example that you can already appreciate, the bottom-up effective interactions discussed in the coarse graining chapter can be computed with any of the techniques discussed in this chapter.

Sometimes also called activation (free) energy.

Note that, per this definition, .

Unbiased simulations are “regular” simulations, where the system evolves according to the fundamental laws of physics (Newton’s laws of motion in this context), without any artificial bias or constraints imposed.

The bias often takes the form of a harmonic potential whose shape, resembling an umbrella, gives the method its name.

This constant will take different values in different windows, since it is an ensemble average of a window-dependent quantity, .

Under certain conditions, histograms computed with some parameters (e.g. temperature or chemical potential) can be reweighted to obtain the same quantity for some other (usually nearby) values of the same parameters, without having to run additional simulations.

Both assumptions are reasonable for most real-world examples, since should be large to have a good statistics, and should be small in a well-sampled, biased simulation, where many bins should have non-zero counts.

As noted in Bussi & Laio (2020), this is similar to a regular observable in an unbiased simulation, which converges to some specific average value after a certain equilibration time, but whose instantaneous value does not have any macroscopic meaning.

Here we reweight the histogram, as you would do with ordinary umbrella sampling histograms.

by definition, the free energy difference between two states do not depend on the path connecting them, as long as such transformation is reversible.

Here ground state is to be meant classically as the equilibrium state of the system as .

- Šulc, P., Romano, F., Ouldridge, T. E., Rovigatti, L., Doye, J. P. K., & Louis, A. A. (2012). Sequence-dependent thermodynamics of a coarse-grained DNA model. The Journal of Chemical Physics, 137(13). 10.1063/1.4754132

- Barducci, A., Bonomi, M., Prakash, M. K., & Parrinello, M. (2013). Free-energy landscape of protein oligomerization from atomistic simulations. Proceedings of the National Academy of Sciences, 110(49). 10.1073/pnas.1320077110

- Hénin, J., Lelièvre, T., Shirts, M. R., Valsson, O., & Delemotte, L. (2022). Enhanced Sampling Methods for Molecular Dynamics Simulations [Article v1.0]. Living Journal of Computational Molecular Science, 4(1), 1583. 10.33011/livecoms.4.1.1583

- Eyring, H. (1935). The Activated Complex in Chemical Reactions. The Journal of Chemical Physics, 3(2), 107–115. 10.1063/1.1749604

- Evans, M. G., & Polanyi, M. (1935). Some applications of the transition state method to the calculation of reaction velocities, especially in solution. Transactions of the Faraday Society, 31, 875. 10.1039/tf9353100875

- Le Priol, C., Monteiro, J. M., & Bouchet, F. (2024). Using rare event algorithms to understand the statistics and dynamics of extreme heatwave seasons in South Asia. Environmental Research: Climate, 3(4), 045016. 10.1088/2752-5295/ad8027

- Torrie, G. M., & Valleau, J. P. (1977). Nonphysical sampling distributions in Monte Carlo free-energy estimation: Umbrella sampling. Journal of Computational Physics, 23(2), 187–199. 10.1016/0021-9991(77)90121-8

- Rovigatti, L., Gnan, N., Parola, A., & Zaccarelli, E. (2015). How soft repulsion enhances the depletion mechanism. Soft Matter, 11(4), 692–700. 10.1039/c4sm02218a

- Virnau, P., & Müller, M. (2004). Calculation of free energy through successive umbrella sampling. The Journal of Chemical Physics, 120(23), 10925–10930. 10.1063/1.1739216

- Bartels, C., & Karplus, M. (1997). Multidimensional adaptive umbrella sampling: Applications to main chain and side chain peptide conformations. Journal of Computational Chemistry, 18(12), 1450–1462. https://doi.org/10.1002/(sici)1096-987x(199709)18:12<;1450::aid-jcc3>3.0.co;2-i

- Bartels, C., & Karplus, M. (1998). Probability Distributions for Complex Systems: Adaptive Umbrella Sampling of the Potential Energy. The Journal of Physical Chemistry B, 102(5), 865–880. 10.1021/jp972280j

- Kumar, S., Rosenberg, J. M., Bouzida, D., Swendsen, R. H., & Kollman, P. A. (1992). THE weighted histogram analysis method for free‐energy calculations on biomolecules. I. The method. Journal of Computational Chemistry, 13(8), 1011–1021. 10.1002/jcc.540130812

- Barducci, A., Bonomi, M., & Parrinello, M. (2011). Metadynamics. WIREs Computational Molecular Science, 1(5), 826–843. 10.1002/wcms.31

- Bussi, G., & Laio, A. (2020). Using metadynamics to explore complex free-energy landscapes. Nature Reviews Physics, 2(4), 200–212. 10.1038/s42254-020-0153-0

- Laio, A., & Parrinello, M. (2002). Escaping free-energy minima. Proceedings of the National Academy of Sciences, 99(20), 12562–12566. 10.1073/pnas.202427399